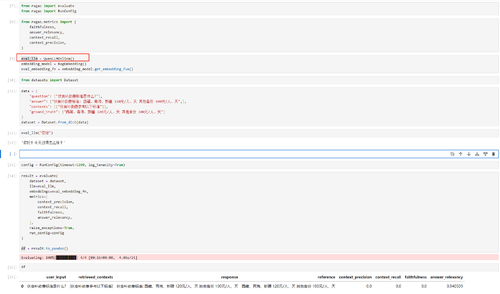

Passing `config` to `evaluate` raises a `set_run_config` error

`QwenLLM` definition in Model.py

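```python
from typing import Any, List, Optional

from langchain_core.callbacks.manager import CallbackManagerForLLMRun
from langchain_core.language_models.llms import LLM
from openai import OpenAI


class QwenLLM(LLM):
    client: Optional[Any] = None

    def __init__(self):
        super().__init__()
        self.client = OpenAI(
            # If the environment variable is not configured, replace the next line
            # with your Bailian API key: api_key="sk-xxx",
            api_key=...,   # value omitted in the original post
            base_url=...,  # value omitted in the original post
        )

    def _call(self,
              prompt: str,
              stop: Optional[List[str]] = None,
              run_manager: Optional[CallbackManagerForLLMRun] = None,
              model="qwen2.5-72b-instruct",
              **kwargs: Any) -> str:
        # The chat completions endpoint expects a list of message dicts,
        # not a bare string, so wrap the prompt as a single user message.
        completion = self.client.chat.completions.create(
            model=model,
            messages=[{"role": "user", "content": prompt}],
            temperature=kwargs.get('temperature', 0.1),
            top_p=kwargs.get('top_p', 0.9),
            max_tokens=kwargs.get('max_tokens', 4096),
            stream=kwargs.get('stream', False))
        return completion.choices[0].message.content

    @property
    def _llm_type(self) -> str:
        return "qwen2.5-72b-instruct"
```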
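A LangChain `LLM` hands `_call` a plain-string `prompt`, while the OpenAI-compatible chat completions endpoint takes `messages` as a list of role/content dicts. The stdlib-only sketch below builds the keyword arguments `_call` would pass to `client.chat.completions.create()`, with the same defaults as the class above. `build_chat_request` is a hypothetical helper, not part of any library, and forwarding LangChain's `stop` list is an addition the posted `_call` does not do.

```python
from typing import Any, Dict, List, Optional


# Hypothetical helper: builds the kwargs that QwenLLM._call would pass to
# client.chat.completions.create(), using the same defaults as the post.
def build_chat_request(prompt: str,
                       model: str = "qwen2.5-72b-instruct",
                       stop: Optional[List[str]] = None,
                       **kwargs: Any) -> Dict[str, Any]:
    request: Dict[str, Any] = {
        "model": model,
        # The chat API expects a list of messages, so the plain-string
        # prompt becomes a single user message.
        "messages": [{"role": "user", "content": prompt}],
        "temperature": kwargs.get("temperature", 0.1),
        "top_p": kwargs.get("top_p", 0.9),
        "max_tokens": kwargs.get("max_tokens", 4096),
        "stream": kwargs.get("stream", False),
    }
    # Forward stop sequences only when LangChain supplies them.
    if stop:
        request["stop"] = stop
    return request


if __name__ == "__main__":
    req = build_chat_request("Is the answer grounded in the context?", temperature=0.0)
    print(req["messages"])
    print(req["temperature"], req["max_tokens"])
```

Inside `_call`, this would be used as `self.client.chat.completions.create(**build_chat_request(prompt, model, stop, **kwargs))`, which keeps the payload shape testable without a network call.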

Evaluating the model

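```python
from ragas import evaluate
from ragas.metrics import answer_relevancy, context_precision, context_recall, faithfulness

# dataset, eval_llm, eval_embedding_fn and config are defined earlier in the notebook
result = evaluate(
    dataset=dataset,
    llm=eval_llm,
    embeddings=eval_embedding_fn,
    metrics=[
        context_precision,
        context_recall,
        faithfulness,
        answer_relevancy,
    ],
    raise_exceptions=True,
    run_config=config,
)

df = result.to_pandas()
```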

Error:

```
---------------------------------------------------------------------------
AttributeError                            Traceback (most recent call last)
Cell In[19], line 1
----> 1 result = evaluate(
      2     dataset = dataset,
      3     llm=eval_llm,
      4     embeddings=eval_embedding_fn,
      5     metrics=[
      6         context_precision,
      7         context_recall,
      8         faithfulness,
      9         answer_relevancy,
     10     ],
     11     raise_exceptions=True,
     12     run_config=config
     13 )
     15 df = result.to_pandas()

File D:\Anaconda\envs\ai\lib\site-packages\ragas\_analytics.py:227, in track_was_completed.<locals>.wrapper(*args, **kwargs)
    224 @wraps(func)
    225 def wrapper(*args: P.args, **kwargs: P.kwargs) -> t.Any:
    226     track(IsCompleteEvent(event_type=func.__name__, is_completed=False))
--> 227     result = func(*args, **kwargs)
    228     track(IsCompleteEvent(event_type=func.__name__, is_completed=True))
    230     return result

File D:\Anaconda\envs\ai\lib\site-packages\ragas\evaluation.py:216, in evaluate(dataset, metrics, llm, embeddings, experiment_name, callbacks, run_config, token_usage_parser, raise_exceptions, column_map, show_progress, batch_size, _run_id, _pbar)
    213             answer_correctness_is_set = i
    215     # init all the models
--> 216     metric.init(run_config)
    218 executor = Executor(
    219     desc="Evaluating",
    220     keep_progress_bar=True,
   (...)
    225     pbar=_pbar,
    226 )
    228 # Ragas Callbacks
    229 # init the callbacks we need for various tasks

File D:\Anaconda\envs\ai\lib\site-packages\ragas\metrics\base.py:227, in MetricWithLLM.init(self, run_config)
    223 if self.llm is None:
    224     raise ValueError(
    225         f"Metric '{self.name}' has no valid LLM provided (self.llm is None). Please initantiate a the metric with an LLM to run." # noqa
    226     )
--> 227 self.llm.set_run_config(run_config)

File D:\Anaconda\envs\ai\lib\site-packages\pydantic\_internal\_model_construction.py:264, in ModelMetaclass.__getattr__(self, item)
    262 if private_attributes and item in private_attributes:
    263     return private_attributes[item]
--> 264 raise AttributeError(item)

AttributeError: set_run_config
```
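The last frame is pydantic's `ModelMetaclass.__getattr__`, the hook that runs when an attribute is looked up on a model *class*; lookups on an instance do not go through it. One plausible reading is therefore that `eval_llm` was bound to the class `QwenLLM` rather than an instance `QwenLLM()`. Assuming `evaluate` only wraps objects that pass an `isinstance` check against LangChain's base model class, a class object would fall through unwrapped, and `set_run_config` would then be looked up on the bare class. The stdlib-only sketch below imitates that chain; `FakeModelMeta`, `FakeBaseLanguageModel`, `FakeQwenLLM`, `LLMWrapper`, `prepare_llm` and `init_metric` are hypothetical stand-ins, not ragas or pydantic code.

```python
from typing import Any


class FakeModelMeta(type):
    """Stand-in for pydantic's ModelMetaclass: unknown *class* attributes raise AttributeError(name)."""

    def __getattr__(cls, item: str) -> Any:
        raise AttributeError(item)


class FakeBaseLanguageModel(metaclass=FakeModelMeta):
    """Stand-in for LangChain's base model class (it has no set_run_config)."""


class FakeQwenLLM(FakeBaseLanguageModel):
    pass


class LLMWrapper:
    """Stand-in for the wrapper object that does provide set_run_config."""

    def __init__(self, llm: Any) -> None:
        self.llm = llm
        self.run_config = None

    def set_run_config(self, run_config: Any) -> None:
        self.run_config = run_config


def prepare_llm(llm: Any) -> Any:
    # Assumed behaviour: only *instances* of the base class get wrapped.
    if isinstance(llm, FakeBaseLanguageModel):
        return LLMWrapper(llm)
    return llm  # a class object falls through unwrapped


def init_metric(llm: Any, run_config: Any) -> Any:
    llm = prepare_llm(llm)
    llm.set_run_config(run_config)  # mirrors MetricWithLLM.init in the traceback
    return llm


if __name__ == "__main__":
    ok = init_metric(FakeQwenLLM(), run_config={"timeout": 60})
    print(type(ok).__name__)  # LLMWrapper
    try:
        init_metric(FakeQwenLLM, run_config={"timeout": 60})  # class, not instance
    except AttributeError as exc:
        print("AttributeError:", exc)  # AttributeError: set_run_config
```

If this is the cause, passing an instance (`llm=QwenLLM()`) should let `evaluate` wrap the model itself; wrapping it explicitly with `ragas.llms.LangchainLLMWrapper(QwenLLM())` is another option.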
