关于线性回归中归一化处理和不对归一化处理的问题

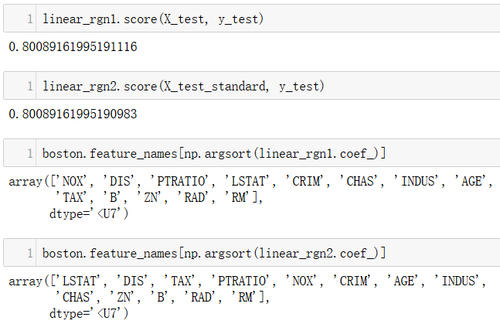

import numpy as np from sklearn.model_selection import GridSearchCV from sklearn.preprocessing import StandardScaler from sklearn.linear_model import LinearRegression from sklearn.model_selection import train_test_split from sklearn import datasets boston = datasets.load_boston() X = boston.data y = boston.target X = X[y<50] y = y[y<50] X_train, X_test, y_train, y_test = train_test_split(X, y, random_state = 666) scaler = StandardScaler() scaler.fit(X_train) X_train_standard = scaler.transform(X_train) X_test_standard = scaler.transform(X_test) linear_rgn1 = LinearRegression() linear_rgn2 = LinearRegression() linear_rgn1.fit(X_train, y_train) linear_rgn2.fit(X_train_standard, y_train) linear_rgn1.score(X_test, y_test) linear_rgn2.score(X_test_standard, y_test) #可以看到score是差不多的 ################################################## boston.feature_names[np.argsort(linear_rgn1.coef_)] boston.feature_names[np.argsort(linear_rgn2.coef_)] #但是对得到的系数进行排序后,却发现很大不同 #那我应该相信归一化处理的还是不进行归一化处理的结果呢?

我记得老师好像在课程中说过,可以不进行归一化,因为得到的参数会有所调整,老师在课程中也没有进行归一化。那对于这个结果该如何解释呢?

4337

收起