scrapy-redis error: TypeError: can't pickle Selector objects

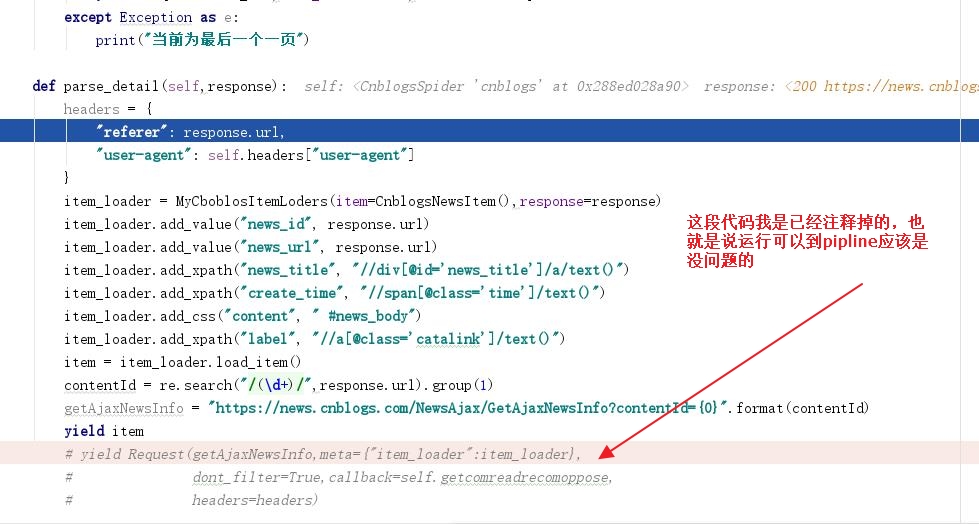

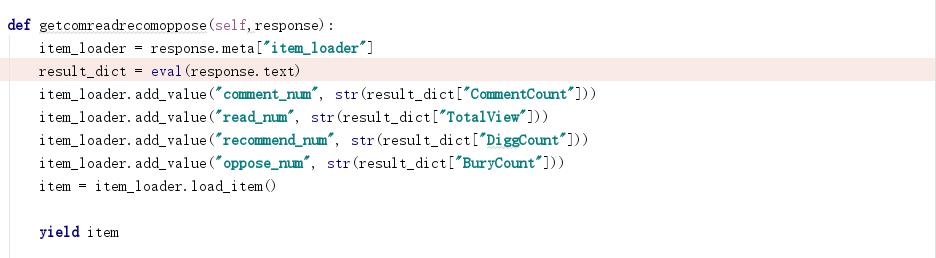

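When I re-enable the code that is commented out in the first screenshot, this error is raised. Execution never reaches the function in the second screenshot, and nothing reaches the pipeline either. The error is: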

    Unhandled error in Deferred:
    2020-02-10 20:43:00 [twisted] CRITICAL: Unhandled error in Deferred:
    Traceback (most recent call last):
      File "E:\Env\scra_redis\lib\site-packages\twisted\internet\base.py", line 1283, in run
        self.mainLoop()
      File "E:\Env\scra_redis\lib\site-packages\twisted\internet\base.py", line 1292, in mainLoop
        self.runUntilCurrent()
      File "E:\Env\scra_redis\lib\site-packages\twisted\internet\base.py", line 913, in runUntilCurrent
        call.func(*call.args, **call.kw)
      File "E:\Env\scra_redis\lib\site-packages\twisted\internet\task.py", line 671, in _tick
        taskObj._oneWorkUnit()
    --- <exception caught here> ---
      File "E:\Env\scra_redis\lib\site-packages\twisted\internet\task.py", line 517, in _oneWorkUnit
        result = next(self._iterator)
      File "E:\Env\scra_redis\lib\site-packages\scrapy\utils\defer.py", line 63, in <genexpr>
        work = (callable(elem, *args, **named) for elem in iterable)
      File "E:\Env\scra_redis\lib\site-packages\scrapy\core\scraper.py", line 184, in _process_spidermw_output
        self.crawler.engine.crawl(request=output, spider=spider)
      File "E:\Env\scra_redis\lib\site-packages\scrapy\core\engine.py", line 210, in crawl
        self.schedule(request, spider)
      File "E:\Env\scra_redis\lib\site-packages\scrapy\core\engine.py", line 216, in schedule
        if not self.slot.scheduler.enqueue_request(request):
      File "E:\Env\scra_redis\lib\site-packages\scrapy_redis\scheduler.py", line 167, in enqueue_request
        self.queue.push(request)
      File "E:\Env\scra_redis\lib\site-packages\scrapy_redis\queue.py", line 99, in push
        data = self._encode_request(request)
      File "E:\Env\scra_redis\lib\site-packages\scrapy_redis\queue.py", line 43, in _encode_request
        return self.serializer.dumps(obj)
      File "E:\Env\scra_redis\lib\site-packages\scrapy_redis\picklecompat.py", line 14, in dumps
        return pickle.dumps(obj, protocol=-1)
      File "E:\Env\scra_redis\lib\site-packages\parsel\selector.py", line 204, in __getstate__
        raise TypeError("can't pickle Selector objects")
    builtins.TypeError: can't pickle Selector objects

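The traceback shows where this fails. When a new Request is scheduled, the scrapy-redis queue serializes it with `pickle.dumps` (via `picklecompat.dumps`), and parsel's `Selector.__getstate__` deliberately raises `TypeError`. Because this happens while the request is being enqueued, before it is ever downloaded, its callback and the pipeline never run. The screenshots aren't reproduced here, so the following is only a minimal sketch under the assumption that the re-enabled code puts a `Selector` (or a `SelectorList`) into the request's `meta`. `SelectorStandIn` is a hypothetical class that mimics only parsel's refusal to be pickled, so the sketch runs without Scrapy installed. `find_unpicklable` and `to_plain_meta` are hypothetical helpers for locating and fixing the offending values.

```python
import pickle


class SelectorStandIn:
    """Hypothetical stand-in for parsel.Selector (assumption, not the real class).

    It mimics only the behaviour seen in the traceback: __getstate__ raises
    TypeError, so any pickle.dumps() that reaches it fails.
    """

    def __init__(self, text):
        self.text = text

    def get(self):
        # Plays the role of Selector.get(): returns the extracted string.
        return self.text

    def __getstate__(self):
        raise TypeError("can't pickle Selector objects")


def find_unpicklable(meta):
    """Return the keys of `meta` whose values pickle.dumps() rejects."""
    bad = []
    for key, value in meta.items():
        try:
            # Same protocol scrapy-redis' picklecompat uses.
            pickle.dumps(value, protocol=-1)
        except Exception:
            bad.append(key)
    return bad


def to_plain_meta(meta):
    """Replace selector-like values with their extracted string."""
    return {
        key: value.get() if isinstance(value, SelectorStandIn) else value
        for key, value in meta.items()
    }


if __name__ == "__main__":
    meta = {"title": SelectorStandIn("Hello"), "page": 2}
    print(find_unpicklable(meta))       # the selector value is the culprit
    safe = to_plain_meta(meta)
    print(pickle.dumps(safe, protocol=-1) is not None)
```

In a real spider the equivalent change is to pass extracted strings rather than selectors, e.g. `meta={"title": response.css("h1::text").get()}` (or `.extract_first()` on older Scrapy versions) instead of `meta={"title": response.css("h1")}`; the field name and CSS selector here are illustrative only. The default Scrapy scheduler keeps requests in memory and usually does not pickle them, which may explain why the same code could work without scrapy-redis.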
