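kubectl top nodes cannot report metrics for more than two edge nodes

When I join a third edge node, the cloud side reports that it joined successfully, but kubectl top nodes then drops the metrics of one of the nodes that was already there.

This is the state after I joined edge02: testing123 gets pushed out.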

root@hecs-411543:~# kubectl get nodes
NAME           STATUS   ROLES                  AGE     VERSION
edge02         Ready    agent,edge             39h     v1.19.3-kubeedge-v1.8.2
imooc-edge01   Ready    agent,edge             9d      v1.19.3-kubeedge-v1.8.2
master         Ready    control-plane,master   9d      v1.21.6
testing123     Ready    agent,edge             4d23h   v1.19.3-kubeedge-v1.8.2

root@hecs-411543:~# kubectl top nodes
W0417 16:54:56.706179 14684 top_node.go:119] Using json format to get metrics. Next release will switch to protocol-buffers, switch early by passing --use-protocol-buffers flag
NAME           CPU(cores)   CPU%        MEMORY(bytes)   MEMORY%
edge02         41m          2%          291Mi           15%
imooc-edge01   11m          0%          689Mi           36%
master         106m         5%          2055Mi          53%
testing123     <unknown>    <unknown>   <unknown>       <unknown>

When I re-join testing123 with the token, that node joins and reports metrics again, but now the metrics for edge02 can no longer be retrieved.

root@hecs-411543:~# kubectl get nodes
NAME           STATUS   ROLES                  AGE     VERSION
edge02         Ready    agent,edge             40h     v1.19.3-kubeedge-v1.8.2
imooc-edge01   Ready    agent,edge             9d      v1.19.3-kubeedge-v1.8.2
master         Ready    control-plane,master   9d      v1.21.6
testing123     Ready    agent,edge             4d23h   v1.19.3-kubeedge-v1.8.2

root@hecs-411543:~# kubectl top nodes
W0417 17:00:53.731812 18978 top_node.go:119] Using json format to get metrics. Next release will switch to protocol-buffers, switch early by passing --use-protocol-buffers flag
NAME           CPU(cores)   CPU%        MEMORY(bytes)   MEMORY%
imooc-edge01   17m          0%          690Mi           36%
master         114m         5%          2054Mi          53%
testing123     18m          0%          275Mi           14%
edge02         <unknown>    <unknown>   <unknown>       <unknown>
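
To track which node loses its metrics after each join, the output above can be filtered for rows that report <unknown>. The following is a minimal sketch, not part of kubectl; the function name unknown_nodes is made up for illustration, and it assumes the column layout shown in the transcripts above.

```shell
# Print the names of nodes whose metrics show as <unknown>.
# Reads `kubectl top nodes` output on stdin.
# (unknown_nodes is a hypothetical helper name, not a kubectl feature.)
unknown_nodes() {
  # Skip the header row; a node without metrics has "<unknown>" in column 2.
  awk '$1 != "NAME" && $2 == "<unknown>" { print $1 }'
}
```

A possible way to use it: run `kubectl top nodes | unknown_nodes` before and after joining a node and compare the two results.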

I have confirmed that streamPort and tunnelPort are enabled and that metrics-server is running.
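
One way to double-check the two ports from the cloud host is to look for listeners in the output of ss. This is only a sketch: the helper name port_listening is hypothetical, and the port numbers 10003 (stream) and 10004 (tunnel) in the usage note are the commonly documented KubeEdge defaults, which is an assumption because the post does not state the configured values.

```shell
# Succeed if the `ss -ltn` output on stdin shows a TCP listener on port $1.
# (port_listening is a hypothetical helper name.)
port_listening() {
  awk -v port="$1" '
    $1 == "LISTEN" {
      # Column 4 is "address:port"; the port is the last colon-separated field.
      n = split($4, parts, ":")
      if (parts[n] == port) found = 1
    }
    END { exit !found }
  '
}
```

For example, `ss -ltn | port_listening 10003 && echo "stream port is listening"`, and the same with 10004 for the tunnel port, assuming those defaults are in use.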

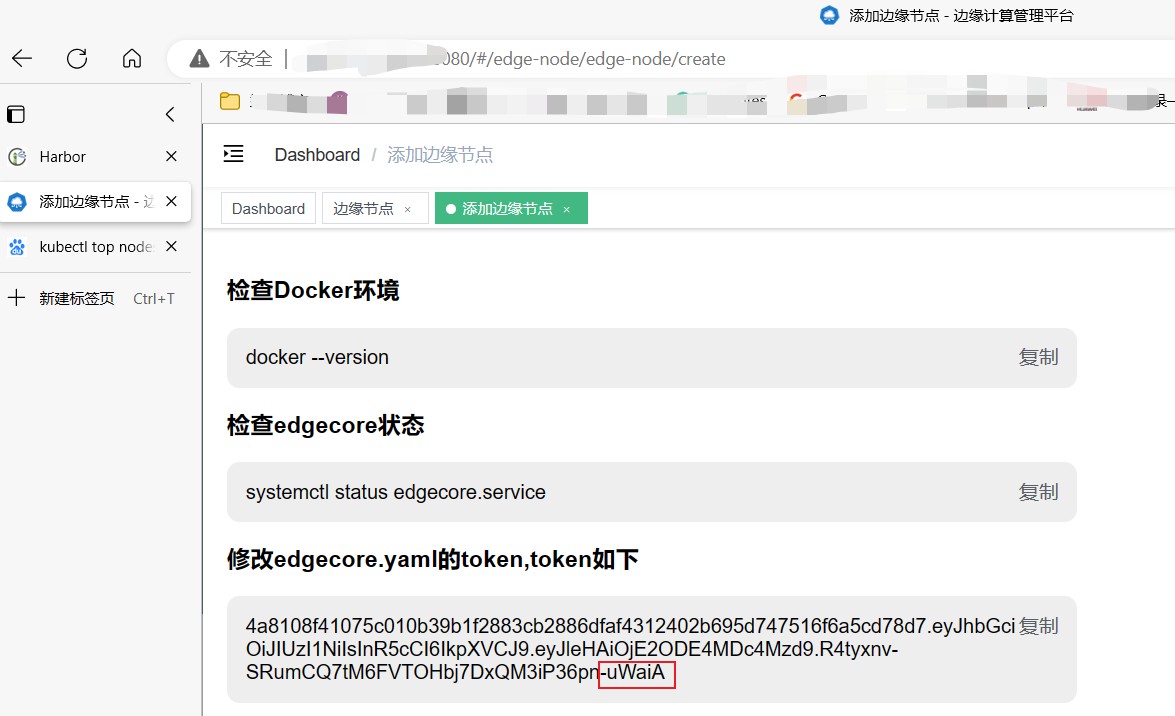

root@hecs-411543:~# keadm gettoken

4a8108f41075c010b39b1f2883cb2886dfaf4312402b695d747516f6a5cd78d7.eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJleHAiOjE2ODE4MDc4Mzd9.R4tyxnv-SRumCQ7tM6FVTOHbj7DxQM3iP36pn-uWaiA

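I checked: the token shown on the web page matches the output of keadm gettoken on the cloud side.

To check whether metrics-server is running, here is the pod list. I removed unrelated kube-system entries to keep it short.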

root@hecs-411543:~# kubectl get pod -A
NAMESPACE      NAME                                READY   STATUS    RESTARTS   AGE
ec-dashboard   ec-dashboard-79476654c6-v6j6j       1/1     Running   1          145m
ec-dashboard   ec-dashboard-web-64989b9876-5kck2   1/1     Running   3          145m
kube-system    metrics-server-56b859787d-xf959     1/1     Running   2          8d

My metrics-server YAML file is as follows.

root@hecs-411543:~/metrics_install# cat deploy.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
    rbac.authorization.k8s.io/aggregate-to-admin: "true"
    rbac.authorization.k8s.io/aggregate-to-edit: "true"
    rbac.authorization.k8s.io/aggregate-to-view: "true"
  name: system:aggregated-metrics-reader
rules:
- apiGroups:
  - metrics.k8s.io
  resources:
  - pods
  - nodes
  verbs:
  - get
  - list
  - watch

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
rules:
- apiGroups:
  - ""
  resources:
  - pods
  - nodes
  - nodes/stats
  - namespaces
  - configmaps
  verbs:
  - get
  - list
  - watch

---

apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server-auth-reader
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: extension-apiserver-authentication-reader
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server:system:auth-delegator
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:auth-delegator
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:metrics-server
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system

---

apiVersion: v1
kind: Service
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  ports:
  - name: https
    port: 443
    protocol: TCP
    targetPort: https
  selector:
    k8s-app: metrics-server

---

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  selector:
    matchLabels:
      k8s-app: metrics-server
  strategy:
    rollingUpdate:
      maxUnavailable: 0
  template:
    metadata:
      labels:
        k8s-app: metrics-server
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: node-role.kubernetes.io/master
                operator: Exists
      tolerations:
      - key: node-role.kubernetes.io/master
        operator: Exists
        effect: NoSchedule
      hostNetwork: true
      containers:
      - args:
        - --cert-dir=/tmp
        - --secure-port=4443
        - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
        - --kubelet-use-node-status-port
        # Skip TLS verification
        - --kubelet-insecure-tls
        image: bitnami/metrics-server:0.4.0
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /livez
            port: https
            scheme: HTTPS
          periodSeconds: 10
        name: metrics-server
        ports:
        - containerPort: 4443
          name: https
          protocol: TCP
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /readyz
            port: https
            scheme: HTTPS
          periodSeconds: 10
        securityContext:
          readOnlyRootFilesystem: true
          runAsNonRoot: true
          runAsUser: 1000
        volumeMounts:
        - mountPath: /tmp
          name: tmp-dir
      nodeSelector:
        kubernetes.io/os: linux
      priorityClassName: system-cluster-critical
      serviceAccountName: metrics-server
      volumes:
      - emptyDir: {}
        name: tmp-dir

---

apiVersion: apiregistration.k8s.io/v1
kind: APIService
metadata:
  labels:
    k8s-app: metrics-server
  name: v1beta1.metrics.k8s.io
spec:
  group: metrics.k8s.io
  groupPriorityMinimum: 100
  insecureSkipTLSVerify: true
  service:
    name: metrics-server
    namespace: kube-system
  version: v1beta1
  versionPriority: 100
