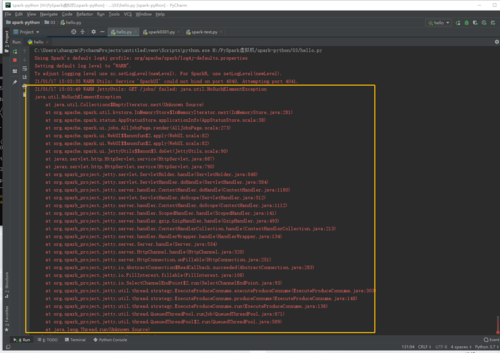

This is the code I'm running. Is my Java environment wrong? I have already configured the Java environment.

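    from pyspark import SparkConf, SparkContext

    # Run Spark locally with a single worker thread.
    conf = SparkConf().setMaster("local[1]").setAppName("spark1")
    sc = SparkContext(conf=conf)

    # Distribute a small list as an RDD and collect it back to the driver.
    data = [1, 2, 3, 4, 5]
    disData = sc.parallelize(data)
    print(disData.collect())

    sc.stop()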
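
To narrow down whether Java is the problem, it may help to check what the Python process itself can see, since that can differ from what a terminal shows. The following is a minimal sketch using only the standard library. The function name `java_environment_report` is hypothetical, and the check assumes the usual layout where the launcher lives in `bin/` under `JAVA_HOME`. It does not interpret the error in the screenshot.

```python
import os
import shutil


def java_environment_report():
    """Collect what the current Python process can see about Java.

    Hypothetical helper for illustration; not part of PySpark.
    """
    java_home = os.environ.get("JAVA_HOME")
    java_on_path = shutil.which("java")

    # If JAVA_HOME is set, check whether bin/ under it holds a java launcher.
    # Assumption: standard JDK/JRE layout (bin/java or bin/java.exe).
    java_home_has_launcher = None
    if java_home:
        candidates = [
            os.path.join(java_home, "bin", name) for name in ("java", "java.exe")
        ]
        java_home_has_launcher = any(os.path.isfile(p) for p in candidates)

    return {
        "java_home": java_home,
        "java_home_has_launcher": java_home_has_launcher,
        "java_on_path": java_on_path,
    }


if __name__ == "__main__":
    for key, value in java_environment_report().items():
        print(f"{key}: {value}")
```

If `java_home` is `None`, or `java_home_has_launcher` is `False`, the variable may not be visible to the interpreter or IDE that runs the script, even when it is configured elsewhere. Running this from the same place as the Spark script gives the most relevant result.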