java.lang.NoSuchMethodError错误

老师您好,按照您说的配置了一遍,4-9中的TestApp能够通过,但是在这一章的时候出现了这个错误,找了很长时间不知道是哪里的问题,只能过来求助了…

Exception in thread “dag-scheduler-event-loop” java.lang.NoSuchMethodError: org.apache.hadoop.mapreduce.InputSplit.getLocationInfo()[Lorg/apache/hadoop/mapred/SplitLocationInfo;

20/03/26 12:38:49 INFO state.StateStoreCoordinatorRef: Registered StateStoreCoordinator endpoint

This is Imooc Spark Project...

20/03/26 12:38:50 INFO memory.MemoryStore: Block broadcast_0 stored as values in memory (estimated size 165.8 KB, free 1948.9 MB)

20/03/26 12:38:50 INFO memory.MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 16.7 KB, free 1948.9 MB)

20/03/26 12:38:50 INFO storage.BlockManagerInfo: Added broadcast_0_piece0 in memory on hadoop000:42985 (size: 16.7 KB, free: 1949.1 MB)

20/03/26 12:38:50 INFO spark.SparkContext: Created broadcast 0 from wholeTextFiles at CustomDatasourceRelation.scala:81

20/03/26 12:38:50 INFO codegen.CodeGenerator: Code generated in 157.36685 ms

20/03/26 12:38:51 INFO codegen.CodeGenerator: Code generated in 14.523292 ms

20/03/26 12:38:51 INFO input.FileInputFormat: Total input paths to process : 1

20/03/26 12:38:51 INFO input.FileInputFormat: Total input paths to process : 1

20/03/26 12:38:51 INFO spark.SparkContext: Starting job: show at Demo.scala:13

20/03/26 12:38:51 INFO scheduler.DAGScheduler: Got job 0 (show at Demo.scala:13) with 1 output partitions

20/03/26 12:38:51 INFO scheduler.DAGScheduler: Final stage: ResultStage 0 (show at Demo.scala:13)

20/03/26 12:38:51 INFO scheduler.DAGScheduler: Parents of final stage: List()

20/03/26 12:38:51 INFO scheduler.DAGScheduler: Missing parents: List()

20/03/26 12:38:51 INFO scheduler.DAGScheduler: Submitting ResultStage 0 (MapPartitionsRDD[8] at show at Demo.scala:13), which has no missing parents

Exception in thread "dag-scheduler-event-loop" java.lang.NoSuchMethodError: org.apache.hadoop.mapreduce.InputSplit.getLocationInfo()[Lorg/apache/hadoop/mapred/SplitLocationInfo;

at org.apache.spark.rdd.NewHadoopRDD.getPreferredLocations(NewHadoopRDD.scala:310)

at org.apache.spark.rdd.RDD$$anonfun$preferredLocations$2.apply(RDD.scala:275)

at org.apache.spark.rdd.RDD$$anonfun$preferredLocations$2.apply(RDD.scala:275)

at scala.Option.getOrElse(Option.scala:121)

at org.apache.spark.rdd.RDD.preferredLocations(RDD.scala:274)

at org.apache.spark.scheduler.DAGScheduler.org$apache$spark$scheduler$DAGScheduler$$getPreferredLocsInternal(DAGScheduler.scala:2005)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$org$apache$spark$scheduler$DAGScheduler$$getPreferredLocsInternal$2$$anonfun$apply$1.apply$mcVI$sp(DAGScheduler.scala:2016)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$org$apache$spark$scheduler$DAGScheduler$$getPreferredLocsInternal$2$$anonfun$apply$1.apply(DAGScheduler.scala:2015)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$org$apache$spark$scheduler$DAGScheduler$$getPreferredLocsInternal$2$$anonfun$apply$1.apply(DAGScheduler.scala:2015)

at scala.collection.immutable.List.foreach(List.scala:392)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$org$apache$spark$scheduler$DAGScheduler$$getPreferredLocsInternal$2.apply(DAGScheduler.scala:2015)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$org$apache$spark$scheduler$DAGScheduler$$getPreferredLocsInternal$2.apply(DAGScheduler.scala:2013)

at scala.collection.immutable.List.foreach(List.scala:392)

at org.apache.spark.scheduler.DAGScheduler.org$apache$spark$scheduler$DAGScheduler$$getPreferredLocsInternal(DAGScheduler.scala:2013)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$org$apache$spark$scheduler$DAGScheduler$$getPreferredLocsInternal$2$$anonfun$apply$1.apply$mcVI$sp(DAGScheduler.scala:2016)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$org$apache$spark$scheduler$DAGScheduler$$getPreferredLocsInternal$2$$anonfun$apply$1.apply(DAGScheduler.scala:2015)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$org$apache$spark$scheduler$DAGScheduler$$getPreferredLocsInternal$2$$anonfun$apply$1.apply(DAGScheduler.scala:2015)

at scala.collection.immutable.List.foreach(List.scala:392)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$org$apache$spark$scheduler$DAGScheduler$$getPreferredLocsInternal$2.apply(DAGScheduler.scala:2015)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$org$apache$spark$scheduler$DAGScheduler$$getPreferredLocsInternal$2.apply(DAGScheduler.scala:2013)

at scala.collection.immutable.List.foreach(List.scala:392)

at org.apache.spark.scheduler.DAGScheduler.org$apache$spark$scheduler$DAGScheduler$$getPreferredLocsInternal(DAGScheduler.scala:2013)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$org$apache$spark$scheduler$DAGScheduler$$getPreferredLocsInternal$2$$anonfun$apply$1.apply$mcVI$sp(DAGScheduler.scala:2016)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$org$apache$spark$scheduler$DAGScheduler$$getPreferredLocsInternal$2$$anonfun$apply$1.apply(DAGScheduler.scala:2015)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$org$apache$spark$scheduler$DAGScheduler$$getPreferredLocsInternal$2$$anonfun$apply$1.apply(DAGScheduler.scala:2015)

at scala.collection.immutable.List.foreach(List.scala:392)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$org$apache$spark$scheduler$DAGScheduler$$getPreferredLocsInternal$2.apply(DAGScheduler.scala:2015)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$org$apache$spark$scheduler$DAGScheduler$$getPreferredLocsInternal$2.apply(DAGScheduler.scala:2013)

at scala.collection.immutable.List.foreach(List.scala:392)

at org.apache.spark.scheduler.DAGScheduler.org$apache$spark$scheduler$DAGScheduler$$getPreferredLocsInternal(DAGScheduler.scala:2013)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$org$apache$spark$scheduler$DAGScheduler$$getPreferredLocsInternal$2$$anonfun$apply$1.apply$mcVI$sp(DAGScheduler.scala:2016)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$org$apache$spark$scheduler$DAGScheduler$$getPreferredLocsInternal$2$$anonfun$apply$1.apply(DAGScheduler.scala:2015)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$org$apache$spark$scheduler$DAGScheduler$$getPreferredLocsInternal$2$$anonfun$apply$1.apply(DAGScheduler.scala:2015)

at scala.collection.immutable.List.foreach(List.scala:392)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$org$apache$spark$scheduler$DAGScheduler$$getPreferredLocsInternal$2.apply(DAGScheduler.scala:2015)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$org$apache$spark$scheduler$DAGScheduler$$getPreferredLocsInternal$2.apply(DAGScheduler.scala:2013)

at scala.collection.immutable.List.foreach(List.scala:392)

at org.apache.spark.scheduler.DAGScheduler.org$apache$spark$scheduler$DAGScheduler$$getPreferredLocsInternal(DAGScheduler.scala:2013)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$org$apache$spark$scheduler$DAGScheduler$$getPreferredLocsInternal$2$$anonfun$apply$1.apply$mcVI$sp(DAGScheduler.scala:2016)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$org$apache$spark$scheduler$DAGScheduler$$getPreferredLocsInternal$2$$anonfun$apply$1.apply(DAGScheduler.scala:2015)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$org$apache$spark$scheduler$DAGScheduler$$getPreferredLocsInternal$2$$anonfun$apply$1.apply(DAGScheduler.scala:2015)

at scala.collection.immutable.List.foreach(List.scala:392)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$org$apache$spark$scheduler$DAGScheduler$$getPreferredLocsInternal$2.apply(DAGScheduler.scala:2015)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$org$apache$spark$scheduler$DAGScheduler$$getPreferredLocsInternal$2.apply(DAGScheduler.scala:2013)

at scala.collection.immutable.List.foreach(List.scala:392)

at org.apache.spark.scheduler.DAGScheduler.org$apache$spark$scheduler$DAGScheduler$$getPreferredLocsInternal(DAGScheduler.scala:2013)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$org$apache$spark$scheduler$DAGScheduler$$getPreferredLocsInternal$2$$anonfun$apply$1.apply$mcVI$sp(DAGScheduler.scala:2016)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$org$apache$spark$scheduler$DAGScheduler$$getPreferredLocsInternal$2$$anonfun$apply$1.apply(DAGScheduler.scala:2015)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$org$apache$spark$scheduler$DAGScheduler$$getPreferredLocsInternal$2$$anonfun$apply$1.apply(DAGScheduler.scala:2015)

at scala.collection.immutable.List.foreach(List.scala:392)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$org$apache$spark$scheduler$DAGScheduler$$getPreferredLocsInternal$2.apply(DAGScheduler.scala:2015)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$org$apache$spark$scheduler$DAGScheduler$$getPreferredLocsInternal$2.apply(DAGScheduler.scala:2013)

at scala.collection.immutable.List.foreach(List.scala:392)

at org.apache.spark.scheduler.DAGScheduler.org$apache$spark$scheduler$DAGScheduler$$getPreferredLocsInternal(DAGScheduler.scala:2013)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$org$apache$spark$scheduler$DAGScheduler$$getPreferredLocsInternal$2$$anonfun$apply$1.apply$mcVI$sp(DAGScheduler.scala:2016)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$org$apache$spark$scheduler$DAGScheduler$$getPreferredLocsInternal$2$$anonfun$apply$1.apply(DAGScheduler.scala:2015)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$org$apache$spark$scheduler$DAGScheduler$$getPreferredLocsInternal$2$$anonfun$apply$1.apply(DAGScheduler.scala:2015)

at scala.collection.immutable.List.foreach(List.scala:392)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$org$apache$spark$scheduler$DAGScheduler$$getPreferredLocsInternal$2.apply(DAGScheduler.scala:2015)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$org$apache$spark$scheduler$DAGScheduler$$getPreferredLocsInternal$2.apply(DAGScheduler.scala:2013)

at scala.collection.immutable.List.foreach(List.scala:392)

at org.apache.spark.scheduler.DAGScheduler.org$apache$spark$scheduler$DAGScheduler$$getPreferredLocsInternal(DAGScheduler.scala:2013)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$org$apache$spark$scheduler$DAGScheduler$$getPreferredLocsInternal$2$$anonfun$apply$1.apply$mcVI$sp(DAGScheduler.scala:2016)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$org$apache$spark$scheduler$DAGScheduler$$getPreferredLocsInternal$2$$anonfun$apply$1.apply(DAGScheduler.scala:2015)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$org$apache$spark$scheduler$DAGScheduler$$getPreferredLocsInternal$2$$anonfun$apply$1.apply(DAGScheduler.scala:2015)

at scala.collection.immutable.List.foreach(List.scala:392)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$org$apache$spark$scheduler$DAGScheduler$$getPreferredLocsInternal$2.apply(DAGScheduler.scala:2015)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$org$apache$spark$scheduler$DAGScheduler$$getPreferredLocsInternal$2.apply(DAGScheduler.scala:2013)

at scala.collection.immutable.List.foreach(List.scala:392)

at org.apache.spark.scheduler.DAGScheduler.org$apache$spark$scheduler$DAGScheduler$$getPreferredLocsInternal(DAGScheduler.scala:2013)

at org.apache.spark.scheduler.DAGScheduler.getPreferredLocs(DAGScheduler.scala:1979)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$17.apply(DAGScheduler.scala:1112)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$17.apply(DAGScheduler.scala:1110)

at scala.collection.TraversableLike$$anonfun$map$1.apply(TraversableLike.scala:234)

at scala.collection.TraversableLike$$anonfun$map$1.apply(TraversableLike.scala:234)

at scala.collection.Iterator$class.foreach(Iterator.scala:891)

at scala.collection.AbstractIterator.foreach(Iterator.scala:1334)

at scala.collection.IterableLike$class.foreach(IterableLike.scala:72)

at scala.collection.AbstractIterable.foreach(Iterable.scala:54)

at scala.collection.TraversableLike$class.map(TraversableLike.scala:234)

at scala.collection.AbstractTraversable.map(Traversable.scala:104)

at org.apache.spark.scheduler.DAGScheduler.submitMissingTasks(DAGScheduler.scala:1110)

at org.apache.spark.scheduler.DAGScheduler.org$apache$spark$scheduler$DAGScheduler$$submitStage(DAGScheduler.scala:1069)

at org.apache.spark.scheduler.DAGScheduler.handleJobSubmitted(DAGScheduler.scala:1013)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.doOnReceive(DAGScheduler.scala:2067)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onReceive(DAGScheduler.scala:2059)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onReceive(DAGScheduler.scala:2048)

at org.apache.spark.util.EventLoop$$anon$1.run(EventLoop.scala:49)

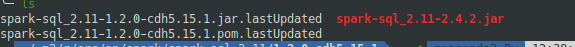

另外,我本地的maven仓库变成这样了

1751

收起