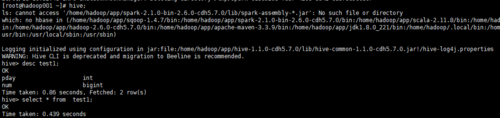

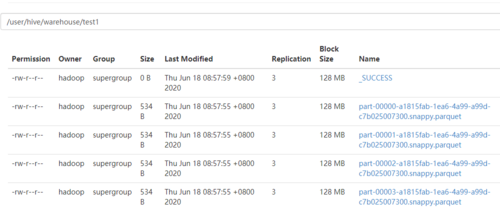

Table created from Scala, but Hive query returns empty

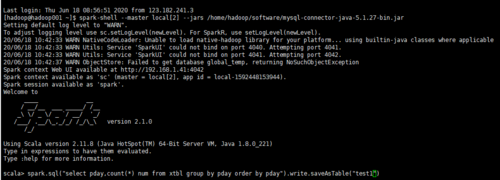

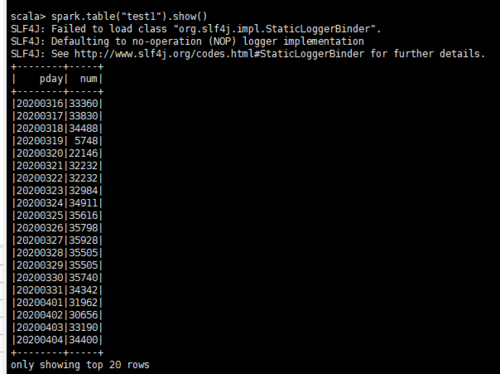

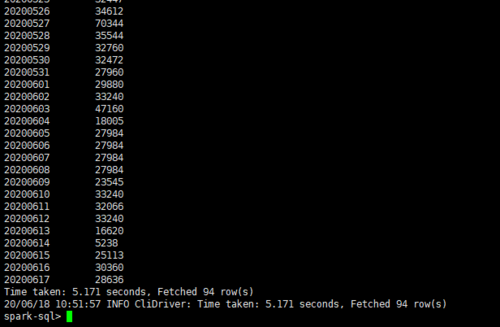

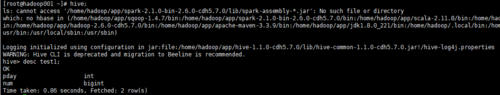

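Hello, teacher. I created a new table, test1, with the Scala command `spark.sql("select pday,count(*) num from xtbl group by pday order by pday").write.saveAsTable("test1")`. After that, both the Scala command `spark.table("test1").show()` and Spark SQL return the inserted data. However, a select query on the same table in Hive returns an empty result.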
