Creating a directory from Java works, but reading and uploading files do not

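Teacher, with the Java API, creating a directory works, but I cannot read the contents of a file on HDFS, and uploading a file to HDFS fails as well. I set up Hadoop CDH 2.60 on an Alibaba Cloud CentOS 7.2 server. The client jars match the installed Hadoop version, and the nodes start normally and the ports look fine.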

1.

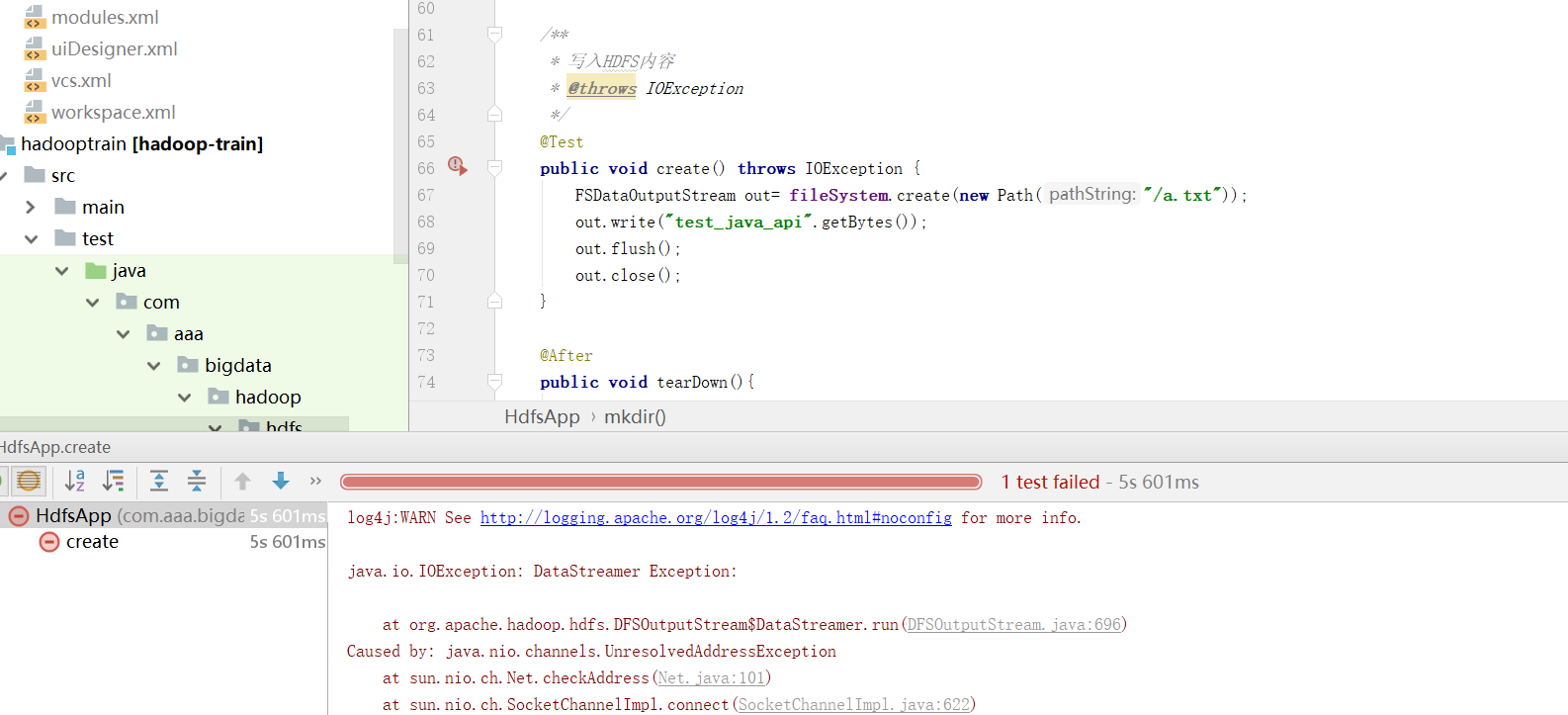

Running the create operation here, a.txt shows up in the HDFS root directory, but it is empty:

FSDataOutputStream out = fileSystem.create(new Path("/a.txt"));

out.write("test_java_api".getBytes());

out.flush();

out.close();

This throws the following exception:

org.apache.hadoop.ipc.RemoteException(java.io.IOException): File /a.txt could only be replicated to 0 nodes instead of minReplication (=1). There are 1 datanode(s) running and 1 node(s) are excluded in this operation.
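A note on the message above: "1 node(s) are excluded" usually means the client gave up on that DataNode, and one common cause when the client runs outside the cluster is that it cannot open a TCP connection to the DataNode's data-transfer port. The sketch below is only a rough way to check that from the client machine using plain JDK sockets. The class name `DataNodePortCheck` is made up for illustration, and port 50010 is an assumption (the Hadoop 2.x default for `dfs.datanode.address`); substitute the address the NameNode actually reports for your DataNode.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

public class DataNodePortCheck {
    // Returns true if a TCP connection to host:port succeeds within timeoutMs.
    public static boolean canConnect(String host, int port, int timeoutMs) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMs);
            return true;
        } catch (IOException e) {
            // Refused, timed out, or host unknown: treat all as "not reachable".
            return false;
        }
    }

    public static void main(String[] args) {
        // Hypothetical defaults; pass the real DataNode host and port as arguments.
        String host = args.length > 0 ? args[0] : "127.0.0.1";
        int port = args.length > 1 ? Integer.parseInt(args[1]) : 50010; // assumed Hadoop 2.x default
        System.out.println(host + ":" + port + " reachable: " + canConnect(host, port, 3000));
    }
}
```

If this prints `false` for the DataNode address while the NameNode port is reachable, that would match the symptom here: metadata operations such as creating a directory succeed, while block reads and writes fail.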

2. A Baidu search suggested adding the following line:

configuration.set("dfs.client.use.datanode.hostname", "true"); // let the client address DataNodes by hostname

But then a new exception is thrown:

java.io.IOException: DataStreamer Exception:

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.run(DFSOutputStream.java:696)

Caused by: java.nio.channels.UnresolvedAddressException

at sun.nio.ch.Net.checkAddress(Net.java:101)

at sun.nio.ch.SocketChannelImpl.connect(SocketChannelImpl.java:622)

at org.apache.hadoop.net.SocketIOWithTimeout.connect(SocketIOWithTimeout.java:192)

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:530)

at org.apache.hadoop.hdfs.DFSOutputStream.createSocketForPipeline(DFSOutputStream.java:1610)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.createBlockOutputStream(DFSOutputStream.java:1408)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.nextBlockOutputStream(DFSOutputStream.java:1361)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.run(DFSOutputStream.java:588)
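For context on this trace: `java.nio.channels.UnresolvedAddressException` is thrown by `SocketChannel.connect` when the target `InetSocketAddress` has no resolved IP, which suggests the client machine could not resolve the DataNode's hostname once `dfs.client.use.datanode.hostname` was enabled. A minimal sketch for checking resolution from the client is shown below, using only the JDK. The class name `HostnameResolveCheck` is hypothetical, and the port value 50010 is an assumed Hadoop 2.x default that does not affect the lookup itself.

```java
import java.net.InetSocketAddress;

public class HostnameResolveCheck {
    // Returns true if the host name resolves to an IP address on this machine.
    // The InetSocketAddress constructor attempts the lookup; on failure the
    // address is marked unresolved instead of throwing.
    public static boolean resolves(String host, int port) {
        return !new InetSocketAddress(host, port).isUnresolved();
    }

    public static void main(String[] args) {
        // Pass the DataNode hostname as the first argument; "localhost" is only a placeholder.
        String host = args.length > 0 ? args[0] : "localhost";
        int port = 50010; // assumed Hadoop 2.x DataNode transfer port, irrelevant to resolution
        System.out.println(host + " resolves: " + resolves(host, port));
    }
}
```

If the DataNode's hostname does not resolve, one thing to try (an assumption about this setup, not something confirmed here) is mapping that hostname to the server's public IP in the client machine's hosts file.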