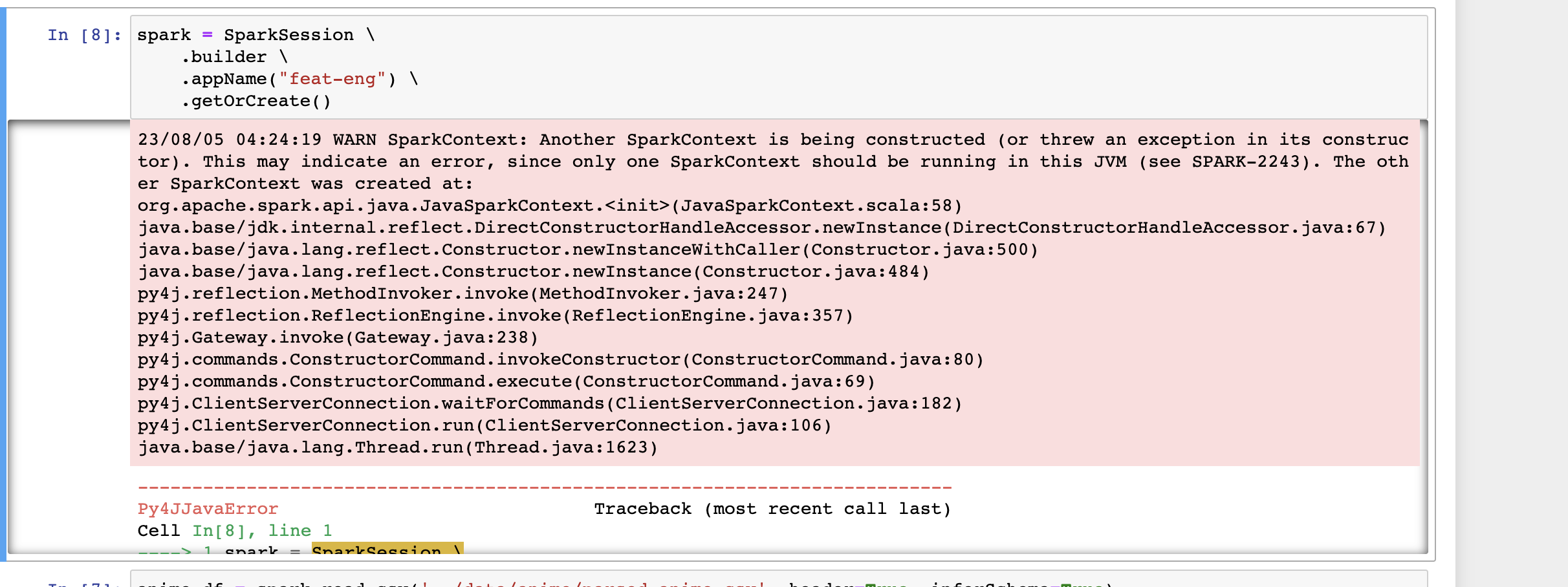

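Py4JJavaError: runtime error during feature processing

Feature processing fails at runtime, as soon as the `SparkSession` is created.

Detailed error: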

---------------------------------------------------------------------------

Py4JJavaError Traceback (most recent call last)

Cell In[8], line 1

----> 1 spark = SparkSession \

2 .builder \

3 .appName("feat-eng") \

4 .getOrCreate()

File ~/anaconda3/lib/python3.8/site-packages/pyspark/sql/session.py:228, in SparkSession.Builder.getOrCreate(self)

226 sparkConf.set(key, value)

227 # This SparkContext may be an existing one.

--> 228 sc = SparkContext.getOrCreate(sparkConf)

229 # Do not update `SparkConf` for existing `SparkContext`, as it's shared

230 # by all sessions.

231 session = SparkSession(sc)

File ~/anaconda3/lib/python3.8/site-packages/pyspark/context.py:392, in SparkContext.getOrCreate(cls, conf)

390 with SparkContext._lock:

391 if SparkContext._active_spark_context is None:

--> 392 SparkContext(conf=conf or SparkConf())

393 return SparkContext._active_spark_context

File ~/anaconda3/lib/python3.8/site-packages/pyspark/context.py:146, in SparkContext.__init__(self, master, appName, sparkHome, pyFiles, environment, batchSize, serializer, conf, gateway, jsc, profiler_cls)

144 SparkContext._ensure_initialized(self, gateway=gateway, conf=conf)

145 try:

--> 146 self._do_init(master, appName, sparkHome, pyFiles, environment, batchSize, serializer,

147 conf, jsc, profiler_cls)

148 except:

149 # If an error occurs, clean up in order to allow future SparkContext creation:

150 self.stop()

File ~/anaconda3/lib/python3.8/site-packages/pyspark/context.py:209, in SparkContext._do_init(self, master, appName, sparkHome, pyFiles, environment, batchSize, serializer, conf, jsc, profiler_cls)

206 self.environment["PYTHONHASHSEED"] = os.environ.get("PYTHONHASHSEED", "0")

208 # Create the Java SparkContext through Py4J

--> 209 self._jsc = jsc or self._initialize_context(self._conf._jconf)

210 # Reset the SparkConf to the one actually used by the SparkContext in JVM.

211 self._conf = SparkConf(_jconf=self._jsc.sc().conf())

File ~/anaconda3/lib/python3.8/site-packages/pyspark/context.py:329, in SparkContext._initialize_context(self, jconf)

325 def _initialize_context(self, jconf):

326 """

327 Initialize SparkContext in function to allow subclass specific initialization

328 """

--> 329 return self._jvm.JavaSparkContext(jconf)

File ~/anaconda3/lib/python3.8/site-packages/py4j/java_gateway.py:1585, in JavaClass.__call__(self, *args)

1579 command = proto.CONSTRUCTOR_COMMAND_NAME +\

1580 self._command_header +\

1581 args_command +\

1582 proto.END_COMMAND_PART

1584 answer = self._gateway_client.send_command(command)

-> 1585 return_value = get_return_value(

1586 answer, self._gateway_client, None, self._fqn)

1588 for temp_arg in temp_args:

1589 temp_arg._detach()

File ~/anaconda3/lib/python3.8/site-packages/py4j/protocol.py:326, in get_return_value(answer, gateway_client, target_id, name)

324 value = OUTPUT_CONVERTER[type](answer[2:], gateway_client)

325 if answer[1] == REFERENCE_TYPE:

--> 326 raise Py4JJavaError(

327 "An error occurred while calling {0}{1}{2}.\n".

328 format(target_id, ".", name), value)

329 else:

330 raise Py4JError(

331 "An error occurred while calling {0}{1}{2}. Trace:\n{3}\n".

332 format(target_id, ".", name, value))

Py4JJavaError: An error occurred while calling None.org.apache.spark.api.java.JavaSparkContext.

: java.lang.NoClassDefFoundError: Could not initialize class org.apache.spark.storage.StorageUtils$

at org.apache.spark.storage.BlockManagerMasterEndpoint.<init>(BlockManagerMasterEndpoint.scala:110)

at org.apache.spark.SparkEnv$.$anonfun$create$9(SparkEnv.scala:348)

at org.apache.spark.SparkEnv$.registerOrLookupEndpoint$1(SparkEnv.scala:287)

at org.apache.spark.SparkEnv$.create(SparkEnv.scala:336)

at org.apache.spark.SparkEnv$.createDriverEnv(SparkEnv.scala:191)

at org.apache.spark.SparkContext.createSparkEnv(SparkContext.scala:277)

at org.apache.spark.SparkContext.<init>(SparkContext.scala:460)

at org.apache.spark.api.java.JavaSparkContext.<init>(JavaSparkContext.scala:58)

at java.base/jdk.internal.reflect.DirectConstructorHandleAccessor.newInstance(DirectConstructorHandleAccessor.java:67)

at java.base/java.lang.reflect.Constructor.newInstanceWithCaller(Constructor.java:500)

at java.base/java.lang.reflect.Constructor.newInstance(Constructor.java:484)

at py4j.reflection.MethodInvoker.invoke(MethodInvoker.java:247)

at py4j.reflection.ReflectionEngine.invoke(ReflectionEngine.java:357)

at py4j.Gateway.invoke(Gateway.java:238)

at py4j.commands.ConstructorCommand.invokeConstructor(ConstructorCommand.java:80)

at py4j.commands.ConstructorCommand.execute(ConstructorCommand.java:69)

at py4j.ClientServerConnection.waitForCommands(ClientServerConnection.java:182)

at py4j.ClientServerConnection.run(ClientServerConnection.java:106)

at java.base/java.lang.Thread.run(Thread.java:1623)

Caused by: java.lang.ExceptionInInitializerError: Exception java.lang.IllegalAccessError: class org.apache.spark.storage.StorageUtils$ (in unnamed module @0x3f025b6b) cannot access class sun.nio.ch.DirectBuffer (in module java.base) because module java.base does not export sun.nio.ch to unnamed module @0x3f025b6b [in thread "Thread-2"]

at org.apache.spark.storage.StorageUtils$.<init>(StorageUtils.scala:213)

at org.apache.spark.storage.StorageUtils$.<clinit>(StorageUtils.scala)

... 19 more
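
The `Caused by` line points at the likely root cause: the JVM running the driver does not export `sun.nio.ch` to Spark's classes, which typically happens when a Spark build runs on a JDK newer than the one it was built to support. Below is a minimal sketch of one commonly used workaround, assuming it is acceptable to relax module encapsulation for the driver JVM. `PYSPARK_SUBMIT_ARGS` and `--driver-java-options` are standard PySpark and spark-submit mechanisms; the helper name `build_submit_args` is hypothetical, and whether this single option is enough for the installed Spark version is an assumption (other packages may need opening as well).

```python
import os

# Assumption: the package named in the "Caused by" line is the one to open.
ADD_OPENS = "--add-opens=java.base/sun.nio.ch=ALL-UNNAMED"


def build_submit_args(jvm_option: str = ADD_OPENS) -> str:
    """Build a PYSPARK_SUBMIT_ARGS value that passes jvm_option to the driver JVM.

    Hypothetical helper for illustration; it is not part of PySpark.
    """
    # The value has to end with "pyspark-shell" so PySpark starts its gateway as usual.
    return f'--driver-java-options "{jvm_option}" pyspark-shell'


# Set this before the first SparkSession / SparkContext is created:
# PySpark launches the JVM only once per Python process.
os.environ["PYSPARK_SUBMIT_ARGS"] = build_submit_args()
```

Restart the kernel first so that no JVM is already attached, run this cell, then re-run the `SparkSession` cell. The alternative, not shown here, is to point `JAVA_HOME` at a JDK release that the installed Spark version officially supports.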
