[Solved] Worker node node3: node "k8s-node3" not found

My problem is similar to the two questions below, but neither of them has a concrete solution.

https://coding.imooc.com/learn/questiondetail/GzLgV6kWyw46kWxb.html

https://coding.imooc.com/learn/questiondetail/vQW1lPEpbKd6yE9A.html

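Today I redid the whole setup from start to finish and the problem is still there. Could the instructor please take a look? The details are as follows:

The nodes are configured as shown in the instructor's videos. node1 and node2 currently work fine; the problem is on node3.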

[root@k8s-node1 kubernetes]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-node2 Ready <none> 9h v1.20.2

[root@k8s-node2 kubernetes]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-node2 Ready <none> 9h v1.20.2

Here is the kubelet log from node3:

[root@k8s-node3 kubernetes]# journalctl -f -u kubelet

Oct 14 21:07:58 k8s-node3 systemd[1]: Started Kubernetes Kubelet.

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363231 18566 flags.go:59] FLAG: --add-dir-header="false"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363287 18566 flags.go:59] FLAG: --address="0.0.0.0"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363293 18566 flags.go:59] FLAG: --allowed-unsafe-sysctls="[]"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363301 18566 flags.go:59] FLAG: --alsologtostderr="false"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363304 18566 flags.go:59] FLAG: --anonymous-auth="true"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363309 18566 flags.go:59] FLAG: --application-metrics-count-limit="100"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363313 18566 flags.go:59] FLAG: --authentication-token-webhook="false"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363317 18566 flags.go:59] FLAG: --authentication-token-webhook-cache-ttl="2m0s"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363321 18566 flags.go:59] FLAG: --authorization-mode="AlwaysAllow"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363325 18566 flags.go:59] FLAG: --authorization-webhook-cache-authorized-ttl="5m0s"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363328 18566 flags.go:59] FLAG: --authorization-webhook-cache-unauthorized-ttl="30s"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363331 18566 flags.go:59] FLAG: --azure-container-registry-config=""

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363334 18566 flags.go:59] FLAG: --boot-id-file="/proc/sys/kernel/random/boot_id"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363338 18566 flags.go:59] FLAG: --bootstrap-kubeconfig=""

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363341 18566 flags.go:59] FLAG: --cert-dir="/var/lib/kubelet/pki"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363345 18566 flags.go:59] FLAG: --cgroup-driver="cgroupfs"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363348 18566 flags.go:59] FLAG: --cgroup-root=""

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363351 18566 flags.go:59] FLAG: --cgroups-per-qos="true"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363354 18566 flags.go:59] FLAG: --chaos-chance="0"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363360 18566 flags.go:59] FLAG: --client-ca-file=""

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363363 18566 flags.go:59] FLAG: --cloud-config=""

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363366 18566 flags.go:59] FLAG: --cloud-provider=""

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363369 18566 flags.go:59] FLAG: --cluster-dns="[]"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363374 18566 flags.go:59] FLAG: --cluster-domain=""

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363376 18566 flags.go:59] FLAG: --cni-bin-dir="/opt/cni/bin"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363380 18566 flags.go:59] FLAG: --cni-cache-dir="/var/lib/cni/cache"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363383 18566 flags.go:59] FLAG: --cni-conf-dir="/etc/cni/net.d"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363386 18566 flags.go:59] FLAG: --config="/etc/kubernetes/kubelet-config.yaml"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363389 18566 flags.go:59] FLAG: --container-hints="/etc/cadvisor/container_hints.json"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363393 18566 flags.go:59] FLAG: --container-log-max-files="5"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363397 18566 flags.go:59] FLAG: --container-log-max-size="10Mi"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363400 18566 flags.go:59] FLAG: --container-runtime="remote"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363403 18566 flags.go:59] FLAG: --container-runtime-endpoint="unix:///var/run/containerd/containerd.sock"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363406 18566 flags.go:59] FLAG: --containerd="/run/containerd/containerd.sock"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363409 18566 flags.go:59] FLAG: --containerd-namespace="k8s.io"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363414 18566 flags.go:59] FLAG: --contention-profiling="false"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363417 18566 flags.go:59] FLAG: --cpu-cfs-quota="true"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363420 18566 flags.go:59] FLAG: --cpu-cfs-quota-period="100ms"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363423 18566 flags.go:59] FLAG: --cpu-manager-policy="none"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363427 18566 flags.go:59] FLAG: --cpu-manager-reconcile-period="10s"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363430 18566 flags.go:59] FLAG: --docker="unix:///var/run/docker.sock"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363433 18566 flags.go:59] FLAG: --docker-endpoint="unix:///var/run/docker.sock"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363436 18566 flags.go:59] FLAG: --docker-env-metadata-whitelist=""

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363439 18566 flags.go:59] FLAG: --docker-only="false"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363442 18566 flags.go:59] FLAG: --docker-root="/var/lib/docker"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363445 18566 flags.go:59] FLAG: --docker-tls="false"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363449 18566 flags.go:59] FLAG: --docker-tls-ca="ca.pem"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363451 18566 flags.go:59] FLAG: --docker-tls-cert="cert.pem"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363454 18566 flags.go:59] FLAG: --docker-tls-key="key.pem"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363457 18566 flags.go:59] FLAG: --dynamic-config-dir=""

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363463 18566 flags.go:59] FLAG: --enable-cadvisor-json-endpoints="false"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363466 18566 flags.go:59] FLAG: --enable-controller-attach-detach="true"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363469 18566 flags.go:59] FLAG: --enable-debugging-handlers="true"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363472 18566 flags.go:59] FLAG: --enable-load-reader="false"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363474 18566 flags.go:59] FLAG: --enable-server="true"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363478 18566 flags.go:59] FLAG: --enforce-node-allocatable="[pods]"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363485 18566 flags.go:59] FLAG: --event-burst="10"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363488 18566 flags.go:59] FLAG: --event-qps="5"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363491 18566 flags.go:59] FLAG: --event-storage-age-limit="default=0"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363494 18566 flags.go:59] FLAG: --event-storage-event-limit="default=0"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363497 18566 flags.go:59] FLAG: --eviction-hard="imagefs.available<15%,memory.available<100Mi,nodefs.available<10%,nodefs.inodesFree<5%"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363507 18566 flags.go:59] FLAG: --eviction-max-pod-grace-period="0"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363511 18566 flags.go:59] FLAG: --eviction-minimum-reclaim=""

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363516 18566 flags.go:59] FLAG: --eviction-pressure-transition-period="5m0s"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363519 18566 flags.go:59] FLAG: --eviction-soft=""

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363522 18566 flags.go:59] FLAG: --eviction-soft-grace-period=""

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363526 18566 flags.go:59] FLAG: --exit-on-lock-contention="false"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363529 18566 flags.go:59] FLAG: --experimental-allocatable-ignore-eviction="false"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363532 18566 flags.go:59] FLAG: --experimental-bootstrap-kubeconfig=""

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363535 18566 flags.go:59] FLAG: --experimental-check-node-capabilities-before-mount="false"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363538 18566 flags.go:59] FLAG: --experimental-dockershim-root-directory="/var/lib/dockershim"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363541 18566 flags.go:59] FLAG: --experimental-kernel-memcg-notification="false"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363544 18566 flags.go:59] FLAG: --experimental-logging-sanitization="false"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363549 18566 flags.go:59] FLAG: --experimental-mounter-path=""

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363551 18566 flags.go:59] FLAG: --fail-swap-on="true"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363555 18566 flags.go:59] FLAG: --feature-gates=""

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363559 18566 flags.go:59] FLAG: --file-check-frequency="20s"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363562 18566 flags.go:59] FLAG: --global-housekeeping-interval="1m0s"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363565 18566 flags.go:59] FLAG: --hairpin-mode="promiscuous-bridge"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363568 18566 flags.go:59] FLAG: --healthz-bind-address="127.0.0.1"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363571 18566 flags.go:59] FLAG: --healthz-port="10248"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363574 18566 flags.go:59] FLAG: --help="false"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363577 18566 flags.go:59] FLAG: --hostname-override=""

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363580 18566 flags.go:59] FLAG: --housekeeping-interval="10s"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363583 18566 flags.go:59] FLAG: --http-check-frequency="20s"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363587 18566 flags.go:59] FLAG: --image-credential-provider-bin-dir=""

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363589 18566 flags.go:59] FLAG: --image-credential-provider-config=""

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363592 18566 flags.go:59] FLAG: --image-gc-high-threshold="85"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363595 18566 flags.go:59] FLAG: --image-gc-low-threshold="80"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363598 18566 flags.go:59] FLAG: --image-pull-progress-deadline="2m0s"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363601 18566 flags.go:59] FLAG: --image-service-endpoint=""

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363604 18566 flags.go:59] FLAG: --iptables-drop-bit="15"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363607 18566 flags.go:59] FLAG: --iptables-masquerade-bit="14"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363609 18566 flags.go:59] FLAG: --keep-terminated-pod-volumes="false"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363613 18566 flags.go:59] FLAG: --kernel-memcg-notification="false"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363615 18566 flags.go:59] FLAG: --kube-api-burst="10"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363618 18566 flags.go:59] FLAG: --kube-api-content-type="application/vnd.kubernetes.protobuf"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363622 18566 flags.go:59] FLAG: --kube-api-qps="5"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363625 18566 flags.go:59] FLAG: --kube-reserved=""

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363628 18566 flags.go:59] FLAG: --kube-reserved-cgroup=""

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363632 18566 flags.go:59] FLAG: --kubeconfig="/etc/kubernetes/kubeconfig"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363635 18566 flags.go:59] FLAG: --kubelet-cgroups=""

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363638 18566 flags.go:59] FLAG: --lock-file=""

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363640 18566 flags.go:59] FLAG: --log-backtrace-at=":0"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363644 18566 flags.go:59] FLAG: --log-cadvisor-usage="false"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363647 18566 flags.go:59] FLAG: --log-dir=""

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363650 18566 flags.go:59] FLAG: --log-file=""

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363653 18566 flags.go:59] FLAG: --log-file-max-size="1800"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363657 18566 flags.go:59] FLAG: --log-flush-frequency="5s"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363660 18566 flags.go:59] FLAG: --logging-format="text"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363662 18566 flags.go:59] FLAG: --logtostderr="true"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363666 18566 flags.go:59] FLAG: --machine-id-file="/etc/machine-id,/var/lib/dbus/machine-id"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363671 18566 flags.go:59] FLAG: --make-iptables-util-chains="true"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363674 18566 flags.go:59] FLAG: --manifest-url=""

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363677 18566 flags.go:59] FLAG: --manifest-url-header=""

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363682 18566 flags.go:59] FLAG: --master-service-namespace="default"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363685 18566 flags.go:59] FLAG: --max-open-files="1000000"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363689 18566 flags.go:59] FLAG: --max-pods="110"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363692 18566 flags.go:59] FLAG: --maximum-dead-containers="-1"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363695 18566 flags.go:59] FLAG: --maximum-dead-containers-per-container="1"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363698 18566 flags.go:59] FLAG: --minimum-container-ttl-duration="0s"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363701 18566 flags.go:59] FLAG: --minimum-image-ttl-duration="2m0s"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363704 18566 flags.go:59] FLAG: --network-plugin="cni"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363707 18566 flags.go:59] FLAG: --network-plugin-mtu="0"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363710 18566 flags.go:59] FLAG: --node-ip="192.168.56.103"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363713 18566 flags.go:59] FLAG: --node-labels=""

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363719 18566 flags.go:59] FLAG: --node-status-max-images="50"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363722 18566 flags.go:59] FLAG: --node-status-update-frequency="10s"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363724 18566 flags.go:59] FLAG: --non-masquerade-cidr="10.0.0.0/8"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363728 18566 flags.go:59] FLAG: --one-output="false"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363731 18566 flags.go:59] FLAG: --oom-score-adj="-999"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363734 18566 flags.go:59] FLAG: --pod-cidr=""

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363737 18566 flags.go:59] FLAG: --pod-infra-container-image="k8s.gcr.io/pause:3.2"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363740 18566 flags.go:59] FLAG: --pod-manifest-path=""

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363743 18566 flags.go:59] FLAG: --pod-max-pids="-1"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363746 18566 flags.go:59] FLAG: --pods-per-core="0"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363749 18566 flags.go:59] FLAG: --port="10250"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363752 18566 flags.go:59] FLAG: --protect-kernel-defaults="false"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363755 18566 flags.go:59] FLAG: --provider-id=""

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363757 18566 flags.go:59] FLAG: --qos-reserved=""

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363760 18566 flags.go:59] FLAG: --read-only-port="10255"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363763 18566 flags.go:59] FLAG: --really-crash-for-testing="false"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363767 18566 flags.go:59] FLAG: --redirect-container-streaming="false"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363769 18566 flags.go:59] FLAG: --register-node="true"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363772 18566 flags.go:59] FLAG: --register-schedulable="true"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363776 18566 flags.go:59] FLAG: --register-with-taints=""

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363782 18566 flags.go:59] FLAG: --registry-burst="10"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363785 18566 flags.go:59] FLAG: --registry-qps="5"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363788 18566 flags.go:59] FLAG: --reserved-cpus=""

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363790 18566 flags.go:59] FLAG: --resolv-conf="/etc/resolv.conf"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363794 18566 flags.go:59] FLAG: --root-dir="/var/lib/kubelet"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363799 18566 flags.go:59] FLAG: --rotate-certificates="false"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363802 18566 flags.go:59] FLAG: --rotate-server-certificates="false"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363805 18566 flags.go:59] FLAG: --runonce="false"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363808 18566 flags.go:59] FLAG: --runtime-cgroups=""

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363810 18566 flags.go:59] FLAG: --runtime-request-timeout="2m0s"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363813 18566 flags.go:59] FLAG: --seccomp-profile-root="/var/lib/kubelet/seccomp"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363816 18566 flags.go:59] FLAG: --serialize-image-pulls="true"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363820 18566 flags.go:59] FLAG: --skip-headers="false"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363823 18566 flags.go:59] FLAG: --skip-log-headers="false"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363826 18566 flags.go:59] FLAG: --stderrthreshold="2"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363829 18566 flags.go:59] FLAG: --storage-driver-buffer-duration="1m0s"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363832 18566 flags.go:59] FLAG: --storage-driver-db="cadvisor"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363835 18566 flags.go:59] FLAG: --storage-driver-host="localhost:8086"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363838 18566 flags.go:59] FLAG: --storage-driver-password="root"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363841 18566 flags.go:59] FLAG: --storage-driver-secure="false"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363844 18566 flags.go:59] FLAG: --storage-driver-table="stats"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363847 18566 flags.go:59] FLAG: --storage-driver-user="root"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363850 18566 flags.go:59] FLAG: --streaming-connection-idle-timeout="4h0m0s"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363853 18566 flags.go:59] FLAG: --sync-frequency="1m0s"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363856 18566 flags.go:59] FLAG: --system-cgroups=""

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363859 18566 flags.go:59] FLAG: --system-reserved=""

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363862 18566 flags.go:59] FLAG: --system-reserved-cgroup=""

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363865 18566 flags.go:59] FLAG: --tls-cert-file=""

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363868 18566 flags.go:59] FLAG: --tls-cipher-suites="[]"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363871 18566 flags.go:59] FLAG: --tls-min-version=""

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363874 18566 flags.go:59] FLAG: --tls-private-key-file=""

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363877 18566 flags.go:59] FLAG: --topology-manager-policy="none"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363880 18566 flags.go:59] FLAG: --topology-manager-scope="container"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363883 18566 flags.go:59] FLAG: --v="2"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363886 18566 flags.go:59] FLAG: --version="false"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363892 18566 flags.go:59] FLAG: --vmodule=""

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363895 18566 flags.go:59] FLAG: --volume-plugin-dir="/usr/libexec/kubernetes/kubelet-plugins/volume/exec/"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363899 18566 flags.go:59] FLAG: --volume-stats-agg-period="1m0s"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.363962 18566 feature_gate.go:243] feature gates: &{map[]}

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.365894 18566 feature_gate.go:243] feature gates: &{map[]}

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.365979 18566 feature_gate.go:243] feature gates: &{map[]}

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.385910 18566 mount_linux.go:202] Detected OS with systemd

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.386043 18566 server.go:416] Version: v1.20.2

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.386113 18566 feature_gate.go:243] feature gates: &{map[]}

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.386206 18566 feature_gate.go:243] feature gates: &{map[]}

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.452173 18566 dynamic_cafile_content.go:129] Loaded a new CA Bundle and Verifier for "client-ca-bundle::/etc/kubernetes/ssl/ca.pem"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.452374 18566 manager.go:165] cAdvisor running in container: "/sys/fs/cgroup/cpu,cpuacct/system.slice/kubelet.service"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.452596 18566 dynamic_cafile_content.go:167] Starting client-ca-bundle::/etc/kubernetes/ssl/ca.pem

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.483298 18566 fs.go:127] Filesystem UUIDs: map[2021-04-28-16-51-58-26:/dev/sr0 8040b258-2862-4b13-9c1d-c1cc6742caed:/dev/sda1 97e78cf0-bf20-41cb-b527-ddabcb80ab3c:/dev/dm-1 9814c5f6-27d1-419f-a06f-6d512f8c02b3:/dev/dm-0]

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.483327 18566 fs.go:128] Filesystem partitions: map[/dev/mapper/centos-root:{mountpoint:/ major:253 minor:0 fsType:xfs blockSize:0} /dev/sda1:{mountpoint:/boot major:8 minor:1 fsType:xfs blockSize:0} /dev/shm:{mountpoint:/dev/shm major:0 minor:18 fsType:tmpfs blockSize:0} /run:{mountpoint:/run major:0 minor:19 fsType:tmpfs blockSize:0} /run/user/0:{mountpoint:/run/user/0 major:0 minor:40 fsType:tmpfs blockSize:0} /sys/fs/cgroup:{mountpoint:/sys/fs/cgroup major:0 minor:20 fsType:tmpfs blockSize:0}]

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.483569 18566 nvidia.go:61] NVIDIA setup failed: no NVIDIA devices found

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.484789 18566 manager.go:213] Machine: {Timestamp:2021-10-14 21:07:58.484722349 +0800 CST m=+0.216053465 NumCores:1 NumPhysicalCores:1 NumSockets:1 CpuFrequency:2303998 MemoryCapacity:1927241728 MemoryByType:map[] NVMInfo:{MemoryModeCapacity:0 AppDirectModeCapacity:0 AvgPowerBudget:0} HugePages:[{PageSize:2048 NumPages:0}] MachineID:633dcbc6d9f1284d818f073275e454f8 SystemUUID:A9FD3D78-1E40-7243-AD20-9FCC9EF52C66 BootID:16aa08e2-addc-4218-bd55-3a7fe62b72b4 Filesystems:[{Device:/dev/shm DeviceMajor:0 DeviceMinor:18 Capacity:963620864 Type:vfs Inodes:235259 HasInodes:true} {Device:/run DeviceMajor:0 DeviceMinor:19 Capacity:963620864 Type:vfs Inodes:235259 HasInodes:true} {Device:/sys/fs/cgroup DeviceMajor:0 DeviceMinor:20 Capacity:963620864 Type:vfs Inodes:235259 HasInodes:true} {Device:/dev/mapper/centos-root DeviceMajor:253 DeviceMinor:0 Capacity:30259806208 Type:vfs Inodes:14780416 HasInodes:true} {Device:/dev/sda1 DeviceMajor:8 DeviceMinor:1 Capacity:1063256064 Type:vfs Inodes:524288 HasInodes:true} {Device:/run/user/0 DeviceMajor:0 DeviceMinor:40 Capacity:192724992 Type:vfs Inodes:235259 HasInodes:true}] DiskMap:map[253:0:{Name:dm-0 Major:253 Minor:0 Size:30270291968 Scheduler:none} 253:1:{Name:dm-1 Major:253 Minor:1 Size:859832320 Scheduler:none} 8:0:{Name:sda Major:8 Minor:0 Size:32212254720 Scheduler:deadline}] NetworkDevices:[{Name:enp0s3 MacAddress:08:00:27:ee:a8:bd Speed:1000 Mtu:1500} {Name:enp0s8 MacAddress:08:00:27:53:87:43 Speed:1000 Mtu:1500} {Name:virbr0 MacAddress:52:54:00:94:f7:67 Speed:0 Mtu:1500} {Name:virbr0-nic MacAddress:52:54:00:94:f7:67 Speed:0 Mtu:1500}] Topology:[{Id:0 Memory:1927241728 HugePages:[{PageSize:2048 NumPages:0}] Cores:[{Id:0 Threads:[0] Caches:[{Size:32768 Type:Data Level:1} {Size:32768 Type:Instruction Level:1} {Size:262144 Type:Unified Level:2}] SocketID:0}] Caches:[{Size:16777216 Type:Unified Level:3}]}] CloudProvider:Unknown InstanceType:Unknown InstanceID:None}

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.484874 18566 manager_no_libpfm.go:28] cAdvisor is build without cgo and/or libpfm support. Perf event counters are not available.

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.488978 18566 manager.go:229] Version: {KernelVersion:3.10.0-1160.36.2.el7.x86_64 ContainerOsVersion:CentOS Linux 7 (Core) DockerVersion:18.03.1-ce DockerAPIVersion:1.37 CadvisorVersion: CadvisorRevision:}

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.489086 18566 server.go:645] --cgroups-per-qos enabled, but --cgroup-root was not specified. defaulting to /

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.489340 18566 container_manager_linux.go:274] container manager verified user specified cgroup-root exists: []

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.489408 18566 container_manager_linux.go:279] Creating Container Manager object based on Node Config: {RuntimeCgroupsName: SystemCgroupsName: KubeletCgroupsName:/systemd/system.slice ContainerRuntime:remote CgroupsPerQOS:true CgroupRoot:/ CgroupDriver:cgroupfs KubeletRootDir:/var/lib/kubelet ProtectKernelDefaults:false NodeAllocatableConfig:{KubeReservedCgroupName: SystemReservedCgroupName: ReservedSystemCPUs: EnforceNodeAllocatable:map[pods:{}] KubeReserved:map[cpu:{i:{value:200 scale:-3} d:{Dec:} s:200m Format:DecimalSI} memory:{i:{value:512 scale:6} d:{Dec:} s:512M Format:DecimalSI}] SystemReserved:map[] HardEvictionThresholds:[{Signal:memory.available Operator:LessThan Value:{Quantity:100Mi Percentage:0} GracePeriod:0s MinReclaim:} {Signal:nodefs.available Operator:LessThan Value:{Quantity: Percentage:0.1} GracePeriod:0s MinReclaim:} {Signal:nodefs.inodesFree Operator:LessThan Value:{Quantity: Percentage:0.05} GracePeriod:0s MinReclaim:} {Signal:imagefs.available Operator:LessThan Value:{Quantity: Percentage:0.15} GracePeriod:0s MinReclaim:}]} QOSReserved:map[] ExperimentalCPUManagerPolicy:none ExperimentalTopologyManagerScope:container ExperimentalCPUManagerReconcilePeriod:10s ExperimentalPodPidsLimit:-1 EnforceCPULimits:true CPUCFSQuotaPeriod:100ms ExperimentalTopologyManagerPolicy:none}

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.489489 18566 topology_manager.go:120] [topologymanager] Creating topology manager with none policy per container scope

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.489496 18566 container_manager_linux.go:310] [topologymanager] Initializing Topology Manager with none policy and container-level scope

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.489502 18566 container_manager_linux.go:315] Creating device plugin manager: true

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.489514 18566 manager.go:133] Creating Device Plugin manager at /var/lib/kubelet/device-plugins/kubelet.sock

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.489607 18566 remote_runtime.go:62] parsed scheme: ""

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.489615 18566 remote_runtime.go:62] scheme "" not registered, fallback to default scheme

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.489638 18566 passthrough.go:48] ccResolverWrapper: sending update to cc: {[{/var/run/containerd/containerd.sock 0 }] }

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.489650 18566 clientconn.go:948] ClientConn switching balancer to "pick_first"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.489686 18566 remote_image.go:50] parsed scheme: ""

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.489691 18566 remote_image.go:50] scheme "" not registered, fallback to default scheme

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.489700 18566 passthrough.go:48] ccResolverWrapper: sending update to cc: {[{/var/run/containerd/containerd.sock 0 }] }

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.489704 18566 clientconn.go:948] ClientConn switching balancer to "pick_first"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.489725 18566 server.go:1117] Using root directory: /var/lib/kubelet

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.489741 18566 kubelet.go:262] Adding pod path: /etc/kubernetes/manifests

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.489762 18566 file.go:68] Watching path "/etc/kubernetes/manifests"

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.489773 18566 kubelet.go:273] Watching apiserver

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.490031 18566 clientconn.go:897] blockingPicker: the picked transport is not ready, loop back to repick

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.490050 18566 reflector.go:219] Starting reflector *v1.Node (0s) from k8s.io/kubernetes/pkg/kubelet/kubelet.go:438

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.490313 18566 balancer_conn_wrappers.go:78] pickfirstBalancer: HandleSubConnStateChange: 0xc000c4bc60, {CONNECTING }

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.490425 18566 balancer_conn_wrappers.go:78] pickfirstBalancer: HandleSubConnStateChange: 0xc000c4be00, {CONNECTING }

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.508117 18566 reflector.go:219] Starting reflector *v1.Pod (0s) from k8s.io/kubernetes/pkg/kubelet/config/apiserver.go:46

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.508462 18566 reflector.go:219] Starting reflector *v1.Service (0s) from k8s.io/client-go/informers/factory.go:134

Oct 14 21:07:58 k8s-node3 kubelet[18566]: E1014 21:07:58.508802 18566 reflector.go:138] k8s.io/kubernetes/pkg/kubelet/kubelet.go:438: Failed to watch *v1.Node: failed to list *v1.Node: Get "https://127.0.0.1:6443/api/v1/nodes?fieldSelector=metadata.name%3Dk8s-node3&limit=500&resourceVersion=0": dial tcp 127.0.0.1:6443: connect: connection refused

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.508850 18566 balancer_conn_wrappers.go:78] pickfirstBalancer: HandleSubConnStateChange: 0xc000c4bc60, {READY }

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.508930 18566 balancer_conn_wrappers.go:78] pickfirstBalancer: HandleSubConnStateChange: 0xc000c4be00, {READY }

Oct 14 21:07:58 k8s-node3 kubelet[18566]: E1014 21:07:58.509047 18566 reflector.go:138] k8s.io/client-go/informers/factory.go:134: Failed to watch *v1.Service: failed to list *v1.Service: Get "https://127.0.0.1:6443/api/v1/services?limit=500&resourceVersion=0": dial tcp 127.0.0.1:6443: connect: connection refused

Oct 14 21:07:58 k8s-node3 kubelet[18566]: E1014 21:07:58.509105 18566 reflector.go:138] k8s.io/kubernetes/pkg/kubelet/config/apiserver.go:46: Failed to watch *v1.Pod: failed to list *v1.Pod: Get "https://127.0.0.1:6443/api/v1/pods?fieldSelector=spec.nodeName%3Dk8s-node3&limit=500&resourceVersion=0": dial tcp 127.0.0.1:6443: connect: connection refused

Oct 14 21:07:58 k8s-node3 kubelet[18566]: I1014 21:07:58.514431 18566 kuberuntime_manager.go:216] Container runtime containerd initialized, version: v1.4.3, apiVersion: v1alpha2

Oct 14 21:07:59 k8s-node3 kubelet[18566]: E1014 21:07:59.360582 18566 reflector.go:138] k8s.io/kubernetes/pkg/kubelet/kubelet.go:438: Failed to watch *v1.Node: failed to list *v1.Node: Get "https://127.0.0.1:6443/api/v1/nodes?fieldSelector=metadata.name%3Dk8s-node3&limit=500&resourceVersion=0": dial tcp 127.0.0.1:6443: connect: connection refused

Oct 14 21:07:59 k8s-node3 kubelet[18566]: E1014 21:07:59.569655 18566 reflector.go:138] k8s.io/client-go/informers/factory.go:134: Failed to watch *v1.Service: failed to list *v1.Service: Get "https://127.0.0.1:6443/api/v1/services?limit=500&resourceVersion=0": dial tcp 127.0.0.1:6443: connect: connection refused

Oct 14 21:07:59 k8s-node3 kubelet[18566]: E1014 21:07:59.869623 18566 reflector.go:138] k8s.io/kubernetes/pkg/kubelet/config/apiserver.go:46: Failed to watch *v1.Pod: failed to list *v1.Pod: Get "https://127.0.0.1:6443/api/v1/pods?fieldSelector=spec.nodeName%3Dk8s-node3&limit=500&resourceVersion=0": dial tcp 127.0.0.1:6443: connect: connection refused

Oct 14 21:08:01 k8s-node3 kubelet[18566]: E1014 21:08:01.895826 18566 reflector.go:138] k8s.io/kubernetes/pkg/kubelet/kubelet.go:438: Failed to watch *v1.Node: failed to list *v1.Node: Get "https://127.0.0.1:6443/api/v1/nodes?fieldSelector=metadata.name%3Dk8s-node3&limit=500&resourceVersion=0": dial tcp 127.0.0.1:6443: connect: connection refused

Oct 14 21:08:02 k8s-node3 kubelet[18566]: E1014 21:08:02.395189 18566 reflector.go:138] k8s.io/client-go/informers/factory.go:134: Failed to watch *v1.Service: failed to list *v1.Service: Get "https://127.0.0.1:6443/api/v1/services?limit=500&resourceVersion=0": dial tcp 127.0.0.1:6443: connect: connection refused

Oct 14 21:08:02 k8s-node3 kubelet[18566]: E1014 21:08:02.708392 18566 reflector.go:138] k8s.io/kubernetes/pkg/kubelet/config/apiserver.go:46: Failed to watch *v1.Pod: failed to list *v1.Pod: Get "https://127.0.0.1:6443/api/v1/pods?fieldSelector=spec.nodeName%3Dk8s-node3&limit=500&resourceVersion=0": dial tcp 127.0.0.1:6443: connect: connection refused

Oct 14 21:08:04 k8s-node3 kubelet[18566]: E1014 21:08:04.741906 18566 aws_credentials.go:77] while getting AWS credentials NoCredentialProviders: no valid providers in chain. Deprecated.

Oct 14 21:08:04 k8s-node3 kubelet[18566]: For verbose messaging see aws.Config.CredentialsChainVerboseErrors

Oct 14 21:08:04 k8s-node3 kubelet[18566]: I1014 21:08:04.742065 18566 kuberuntime_manager.go:1006] updating runtime config through cri with podcidr 10.200.0.0/16

Oct 14 21:08:04 k8s-node3 kubelet[18566]: I1014 21:08:04.743120 18566 kubelet_network.go:77] Setting Pod CIDR: -> 10.200.0.0/16

Oct 14 21:08:04 k8s-node3 kubelet[18566]: I1014 21:08:04.743252 18566 plugins.go:635] Loaded volume plugin "kubernetes.io/gce-pd"

Oct 14 21:08:04 k8s-node3 kubelet[18566]: I1014 21:08:04.743262 18566 plugins.go:635] Loaded volume plugin "kubernetes.io/cinder"

Oct 14 21:08:04 k8s-node3 kubelet[18566]: I1014 21:08:04.743268 18566 plugins.go:635] Loaded volume plugin "kubernetes.io/azure-disk"

Oct 14 21:08:04 k8s-node3 kubelet[18566]: I1014 21:08:04.743274 18566 plugins.go:635] Loaded volume plugin "kubernetes.io/azure-file"

Oct 14 21:08:04 k8s-node3 kubelet[18566]: I1014 21:08:04.743281 18566 plugins.go:635] Loaded volume plugin "kubernetes.io/vsphere-volume"

Oct 14 21:08:04 k8s-node3 kubelet[18566]: I1014 21:08:04.743286 18566 plugins.go:635] Loaded volume plugin "kubernetes.io/aws-ebs"

Oct 14 21:08:04 k8s-node3 kubelet[18566]: I1014 21:08:04.743293 18566 plugins.go:635] Loaded volume plugin "kubernetes.io/empty-dir"

Oct 14 21:08:04 k8s-node3 kubelet[18566]: I1014 21:08:04.743300 18566 plugins.go:635] Loaded volume plugin "kubernetes.io/git-repo"

Oct 14 21:08:04 k8s-node3 kubelet[18566]: I1014 21:08:04.743309 18566 plugins.go:635] Loaded volume plugin "kubernetes.io/host-path"

Oct 14 21:08:04 k8s-node3 kubelet[18566]: I1014 21:08:04.743316 18566 plugins.go:635] Loaded volume plugin "kubernetes.io/nfs"

Oct 14 21:08:04 k8s-node3 kubelet[18566]: E1014 21:08:04.852308 18566 eviction_manager.go:260] eviction manager: failed to get summary stats: failed to get node info: node "k8s-node3" not found

Oct 14 21:08:04 k8s-node3 kubelet[18566]: E1014 21:08:04.853851 18566 kubelet.go:2243] node "k8s-node3" not found

Oct 14 21:08:04 k8s-node3 kubelet[18566]: I1014 21:08:04.853872 18566 kubelet_node_status.go:339] Setting node annotation to enable volume controller attach/detach

Oct 14 21:08:04 k8s-node3 kubelet[18566]: I1014 21:08:04.854042 18566 setters.go:86] Using node IP: "192.168.56.103"

Oct 14 21:08:04 k8s-node3 kubelet[18566]: I1014 21:08:04.856728 18566 kubelet_node_status.go:531] Recording NodeHasSufficientMemory event message for node k8s-node3

Oct 14 21:08:04 k8s-node3 kubelet[18566]: I1014 21:08:04.856752 18566 kubelet_node_status.go:531] Recording NodeHasNoDiskPressure event message for node k8s-node3

Oct 14 21:08:04 k8s-node3 kubelet[18566]: I1014 21:08:04.856760 18566 kubelet_node_status.go:531] Recording NodeHasSufficientPID event message for node k8s-node3

Oct 14 21:08:04 k8s-node3 kubelet[18566]: I1014 21:08:04.856778 18566 kubelet_node_status.go:71] Attempting to register node k8s-node3

Oct 14 21:08:04 k8s-node3 kubelet[18566]: E1014 21:08:04.857083 18566 kubelet_node_status.go:93] Unable to register node "k8s-node3" with API server: Post "https://127.0.0.1:6443/api/v1/nodes": dial tcp 127.0.0.1:6443: connect: connection refused

Oct 14 21:08:04 k8s-node3 kubelet[18566]: I1014 21:08:04.866338 18566 kubelet.go:1888] SyncLoop (ADD, "file"): "nginx-proxy-k8s-node3_kube-system(7cf27d45a874e9a25860392cd83c3e6a)"

Oct 14 21:08:04 k8s-node3 kubelet[18566]: I1014 21:08:04.866378 18566 topology_manager.go:187] [topologymanager] Topology Admit Handler

Oct 14 21:08:04 k8s-node3 kubelet[18566]: I1014 21:08:04.866423 18566 kubelet_node_status.go:339] Setting node annotation to enable volume controller attach/detach

Oct 14 21:08:04 k8s-node3 kubelet[18566]: I1014 21:08:04.866586 18566 setters.go:86] Using node IP: "192.168.56.103"

Oct 14 21:08:04 k8s-node3 kubelet[18566]: I1014 21:08:04.868942 18566 kubelet_node_status.go:531] Recording NodeHasSufficientMemory event message for node k8s-node3

Oct 14 21:08:04 k8s-node3 kubelet[18566]: I1014 21:08:04.868958 18566 kubelet_node_status.go:531] Recording NodeHasNoDiskPressure event message for node k8s-node3

Oct 14 21:08:04 k8s-node3 kubelet[18566]: I1014 21:08:04.868967 18566 kubelet_node_status.go:531] Recording NodeHasSufficientPID event message for node k8s-node3

Oct 14 21:08:04 k8s-node3 kubelet[18566]: I1014 21:08:04.869937 18566 kubelet_node_status.go:339] Setting node annotation to enable volume controller attach/detach

Oct 14 21:08:04 k8s-node3 kubelet[18566]: I1014 21:08:04.870121 18566 setters.go:86] Using node IP: "192.168.56.103"

Oct 14 21:08:04 k8s-node3 kubelet[18566]: I1014 21:08:04.881784 18566 kubelet_node_status.go:531] Recording NodeHasSufficientMemory event message for node k8s-node3

Oct 14 21:08:04 k8s-node3 kubelet[18566]: I1014 21:08:04.881806 18566 kubelet_node_status.go:531] Recording NodeHasNoDiskPressure event message for node k8s-node3

Oct 14 21:08:04 k8s-node3 kubelet[18566]: I1014 21:08:04.881813 18566 kubelet_node_status.go:531] Recording NodeHasSufficientPID event message for node k8s-node3

Oct 14 21:08:04 k8s-node3 kubelet[18566]: W1014 21:08:04.886635 18566 status_manager.go:550] Failed to get status for pod "nginx-proxy-k8s-node3_kube-system(7cf27d45a874e9a25860392cd83c3e6a)": Get "https://127.0.0.1:6443/api/v1/namespaces/kube-system/pods/nginx-proxy-k8s-node3": dial tcp 127.0.0.1:6443: connect: connection refused

Oct 14 21:08:04 k8s-node3 kubelet[18566]: I1014 21:08:04.954350 18566 reconciler.go:224] operationExecutor.VerifyControllerAttachedVolume started for volume "etc-nginx" (UniqueName: "kubernetes.io/host-path/7cf27d45a874e9a25860392cd83c3e6a-etc-nginx") pod "nginx-proxy-k8s-node3" (UID: "7cf27d45a874e9a25860392cd83c3e6a")

Oct 14 21:08:04 k8s-node3 kubelet[18566]: E1014 21:08:04.954421 18566 kubelet.go:2243] node "k8s-node3" not found

Oct 14 21:08:04 k8s-node3 kubelet[18566]: E1014 21:08:04.969801 18566 controller.go:144] failed to ensure lease exists, will retry in 400ms, error: Get "https://127.0.0.1:6443/apis/coordination.k8s.io/v1/namespaces/kube-node-lease/leases/k8s-node3?timeout=10s": dial tcp 127.0.0.1:6443: connect: connection refused

Oct 14 21:08:05 k8s-node3 kubelet[18566]: E1014 21:08:05.054516 18566 kubelet.go:2243] node "k8s-node3" not found

Oct 14 21:08:05 k8s-node3 kubelet[18566]: I1014 21:08:05.054582 18566 reconciler.go:269] operationExecutor.MountVolume started for volume "etc-nginx" (UniqueName: "kubernetes.io/host-path/7cf27d45a874e9a25860392cd83c3e6a-etc-nginx") pod "nginx-proxy-k8s-node3" (UID: "7cf27d45a874e9a25860392cd83c3e6a")

Oct 14 21:08:05 k8s-node3 kubelet[18566]: I1014 21:08:05.054629 18566 operation_generator.go:672] MountVolume.SetUp succeeded for volume "etc-nginx" (UniqueName: "kubernetes.io/host-path/7cf27d45a874e9a25860392cd83c3e6a-etc-nginx") pod "nginx-proxy-k8s-node3" (UID: "7cf27d45a874e9a25860392cd83c3e6a")

Oct 14 21:08:05 k8s-node3 kubelet[18566]: I1014 21:08:05.057216 18566 kubelet_node_status.go:339] Setting node annotation to enable volume controller attach/detach

Oct 14 21:08:05 k8s-node3 kubelet[18566]: I1014 21:08:05.057385 18566 setters.go:86] Using node IP: "192.168.56.103"

Oct 14 21:08:05 k8s-node3 kubelet[18566]: I1014 21:08:05.059065 18566 kubelet_node_status.go:531] Recording NodeHasSufficientMemory event message for node k8s-node3

Oct 14 21:08:05 k8s-node3 kubelet[18566]: I1014 21:08:05.059080 18566 kubelet_node_status.go:531] Recording NodeHasNoDiskPressure event message for node k8s-node3

Oct 14 21:08:05 k8s-node3 kubelet[18566]: I1014 21:08:05.059086 18566 kubelet_node_status.go:531] Recording NodeHasSufficientPID event message for node k8s-node3

Oct 14 21:08:05 k8s-node3 kubelet[18566]: I1014 21:08:05.059102 18566 kubelet_node_status.go:71] Attempting to register node k8s-node3

Oct 14 21:08:05 k8s-node3 kubelet[18566]: E1014 21:08:05.059289 18566 kubelet_node_status.go:93] Unable to register node "k8s-node3" with API server: Post "https://127.0.0.1:6443/api/v1/nodes": dial tcp 127.0.0.1:6443: connect: connection refused

Oct 14 21:08:05 k8s-node3 kubelet[18566]: E1014 21:08:05.154664 18566 kubelet.go:2243] node "k8s-node3" not found

Oct 14 21:08:05 k8s-node3 kubelet[18566]: I1014 21:08:05.186643 18566 kuberuntime_manager.go:439] No sandbox for pod "nginx-proxy-k8s-node3_kube-system(7cf27d45a874e9a25860392cd83c3e6a)" can be found. Need to start a new one

Oct 14 21:08:05 k8s-node3 kubelet[18566]: E1014 21:08:05.230752 18566 remote_runtime.go:116] RunPodSandbox from runtime service failed: rpc error: code = Unknown desc = failed to create containerd task: OCI runtime create failed: expected cgroupsPath to be of format "slice:prefix:name" for systemd cgroups, got "/kubepods/burstable/pod7cf27d45a874e9a25860392cd83c3e6a/48876d27585fd21cd07fce7641bd06d6a2b7c3bebcf576d750e4ce458289af3c" instead: unknown

Oct 14 21:08:05 k8s-node3 kubelet[18566]: E1014 21:08:05.230800 18566 kuberuntime_sandbox.go:70] CreatePodSandbox for pod "nginx-proxy-k8s-node3_kube-system(7cf27d45a874e9a25860392cd83c3e6a)" failed: rpc error: code = Unknown desc = failed to create containerd task: OCI runtime create failed: expected cgroupsPath to be of format "slice:prefix:name" for systemd cgroups, got "/kubepods/burstable/pod7cf27d45a874e9a25860392cd83c3e6a/48876d27585fd21cd07fce7641bd06d6a2b7c3bebcf576d750e4ce458289af3c" instead: unknown

Oct 14 21:08:05 k8s-node3 kubelet[18566]: E1014 21:08:05.230815 18566 kuberuntime_manager.go:755] createPodSandbox for pod "nginx-proxy-k8s-node3_kube-system(7cf27d45a874e9a25860392cd83c3e6a)" failed: rpc error: code = Unknown desc = failed to create containerd task: OCI runtime create failed: expected cgroupsPath to be of format "slice:prefix:name" for systemd cgroups, got "/kubepods/burstable/pod7cf27d45a874e9a25860392cd83c3e6a/48876d27585fd21cd07fce7641bd06d6a2b7c3bebcf576d750e4ce458289af3c" instead: unknown

Oct 14 21:08:05 k8s-node3 kubelet[18566]: E1014 21:08:05.230852 18566 pod_workers.go:191] Error syncing pod 7cf27d45a874e9a25860392cd83c3e6a ("nginx-proxy-k8s-node3_kube-system(7cf27d45a874e9a25860392cd83c3e6a)"), skipping: failed to "CreatePodSandbox" for "nginx-proxy-k8s-node3_kube-system(7cf27d45a874e9a25860392cd83c3e6a)" with CreatePodSandboxError: "CreatePodSandbox for pod "nginx-proxy-k8s-node3_kube-system(7cf27d45a874e9a25860392cd83c3e6a)" failed: rpc error: code = Unknown desc = failed to create containerd task: OCI runtime create failed: expected cgroupsPath to be of format "slice:prefix:name" for systemd cgroups, got "/kubepods/burstable/pod7cf27d45a874e9a25860392cd83c3e6a/48876d27585fd21cd07fce7641bd06d6a2b7c3bebcf576d750e4ce458289af3c" instead: unknown"

Oct 14 21:08:05 k8s-node3 kubelet[18566]: E1014 21:08:05.254773 18566 kubelet.go:2243] node "k8s-node3" not found

Oct 14 21:08:05 k8s-node3 kubelet[18566]: E1014 21:08:05.260770 18566 reflector.go:138] k8s.io/kubernetes/pkg/kubelet/kubelet.go:438: Failed to watch *v1.Node: failed to list *v1.Node: Get "https://127.0.0.1:6443/api/v1/nodes?fieldSelector=metadata.name%3Dk8s-node3&limit=500&resourceVersion=0": dial tcp 127.0.0.1:6443: connect: connection refused

Oct 14 21:08:05 k8s-node3 kubelet[18566]: E1014 21:08:05.355538 18566 kubelet.go:2243] node "k8s-node3" not found

Oct 14 21:08:05 k8s-node3 kubelet[18566]: E1014 21:08:05.370116 18566 controller.go:144] failed to ensure lease exists, will retry in 800ms, error: Get "https://127.0.0.1:6443/apis/coordination.k8s.io/v1/namespaces/kube-node-lease/leases/k8s-node3?timeout=10s": dial tcp 127.0.0.1:6443: connect: connection refused

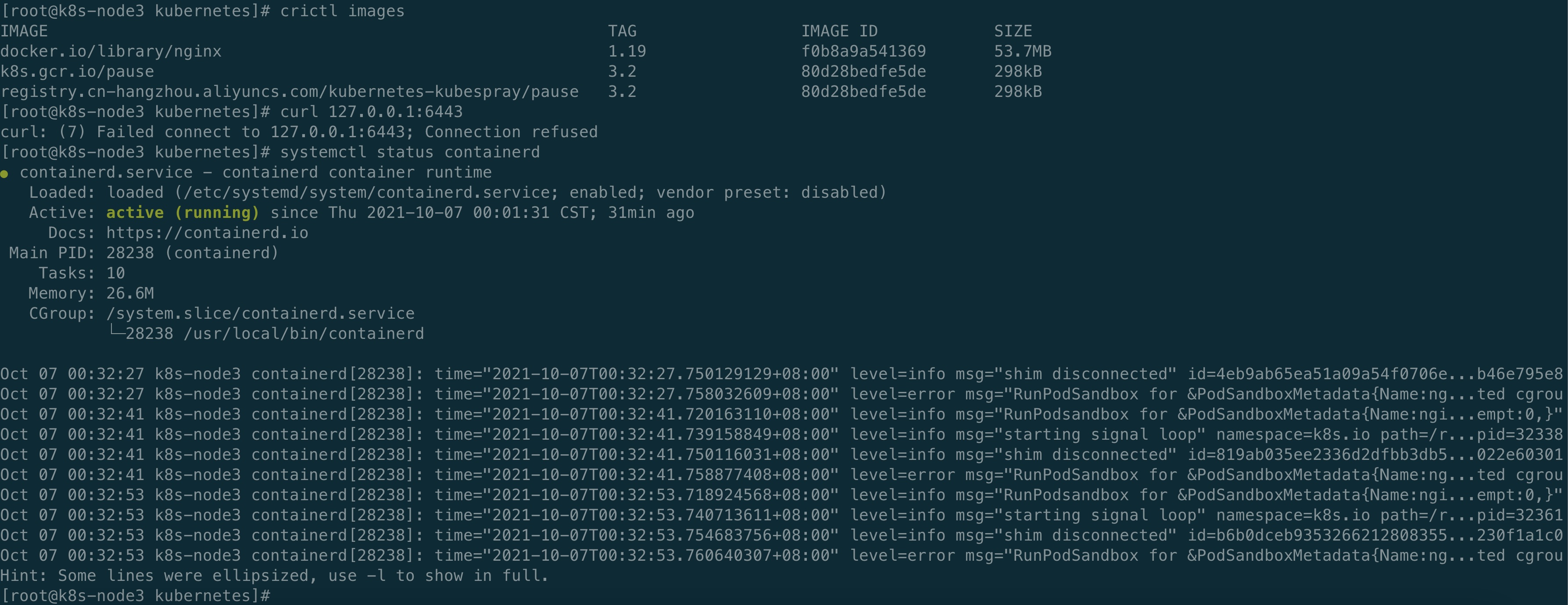
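
The kubelet log above mixes two different kinds of failure: many `connection refused` errors against `127.0.0.1:6443`, and a smaller group of `cgroupsPath` errors from sandbox creation. A rough way to see the split is to count error records by cause. The sketch below is only an illustration: `summarize_kubelet_errors` is a hypothetical helper name, and the categories are simply the three message fragments visible in this log.

```shell
# Hypothetical helper: group kubelet error records (klog severity "E") by cause.
# Reads journal text on stdin and prints one summary line.
summarize_kubelet_errors() {
  awk '
    # klog error records carry a token such as E1014 (E + month + day).
    / E[0-9][0-9][0-9][0-9] / {
      if ($0 ~ /connection refused/) refused++
      else if ($0 ~ /cgroupsPath/)   cgroup++
      else if ($0 ~ /not found/)     notfound++
      else                           other++
    }
    END {
      printf "connection_refused=%d cgroups_path=%d node_not_found=%d other=%d\n",
             refused, cgroup, notfound, other
    }'
}
```

It could be fed with something like `journalctl -u kubelet --no-pager | summarize_kubelet_errors`. A non-zero `cgroups_path` count suggests looking at the container runtime before the network.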

The following images were pulled in advance:

[root@k8s-node3 kubernetes]# crictl images

IMAGE TAG IMAGE ID SIZE

docker.io/library/nginx 1.19 f0b8a9a541369 53.7MB

k8s.gcr.io/pause 3.2 80d28bedfe5de 298kB

registry.cn-hangzhou.aliyuncs.com/kubernetes-kubespray/pause 3.2 80d28bedfe5de 298kB

Connection attempt:

[root@k8s-node3 kubernetes]# curl 127.0.0.1:6443

curl: (7) Failed connect to 127.0.0.1:6443; Connection refused
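
A refused connection on `127.0.0.1:6443` does not say whether the apiservers themselves are down or only the local proxy is missing. One way to tell the two apart is to probe the upstream addresses directly. This is a minimal sketch, assuming bash and the coreutils `timeout` command are available; `probe_tcp` is a hypothetical helper, and the upstream IPs in the usage note are the ones from the nginx configuration in this post.

```shell
# Hypothetical helper: report whether a TCP port accepts connections.
# Relies on bash's built-in /dev/tcp redirection, so it needs bash, not plain sh.
probe_tcp() {
  local host=$1 port=$2
  if timeout 2 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null; then
    echo "${host}:${port} open"
  else
    echo "${host}:${port} closed"
    return 1
  fi
}
```

For example, `for h in 192.168.56.101 192.168.56.102 127.0.0.1; do probe_tcp "$h" 6443; done`. If the two upstreams are open and only `127.0.0.1` is closed, the apiservers are reachable and the problem is local to the node.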

nginx configuration file:

[root@k8s-node3 kubernetes]# cat /etc/nginx/nginx.conf

error_log stderr notice;

worker_processes 2;

worker_rlimit_nofile 130048;

worker_shutdown_timeout 10s;

events {

multi_accept on;

use epoll;

worker_connections 16384;

}

stream {

upstream kube_apiserver {

least_conn;

server 192.168.56.101:6443;

server 192.168.56.102:6443;

}

server {

listen 127.0.0.1:6443;

proxy_pass kube_apiserver;

proxy_timeout 10m;

proxy_connect_timeout 1s;

}

}

http {

aio threads;

aio_write on;

tcp_nopush on;

tcp_nodelay on;

keepalive_timeout 5m;

keepalive_requests 100;

reset_timedout_connection on;

server_tokens off;

autoindex off;

server {

listen 8081;

location /healthz {

access_log off;

return 200;

}

location /stub_status {

stub_status on;

access_log off;

}

}

}

nginx manifest file:

[root@k8s-node3 kubernetes]# cat /etc/kubernetes/manifests/nginx-proxy.yaml

apiVersion: v1

kind: Pod

metadata:

name: nginx-proxy

namespace: kube-system

labels:

addonmanager.kubernetes.io/mode: Reconcile

k8s-app: kube-nginx

spec:

hostNetwork: true

dnsPolicy: ClusterFirstWithHostNet

nodeSelector:

kubernetes.io/os: linux

priorityClassName: system-node-critical

containers:

- name: nginx-proxy

image: docker.io/library/nginx:1.19

imagePullPolicy: IfNotPresent

resources:

requests:

cpu: 25m

memory: 32M

securityContext:

privileged: true

livenessProbe:

httpGet:

path: /healthz

port: 8081

readinessProbe:

httpGet:

path: /healthz

port: 8081

volumeMounts:

- mountPath: /etc/nginx

name: etc-nginx

readOnly: true

volumes:

- name: etc-nginx

hostPath:

path: /etc/nginx
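
According to the kubelet log, the sandbox for this static pod is rejected with `expected cgroupsPath to be of format "slice:prefix:name" for systemd cgroups`. runc reports this when it runs with the systemd cgroup driver but is handed a cgroupfs-style path, which usually points to kubelet and containerd being set to different cgroup drivers. If the proxy pod never starts, that would also explain why nothing answers on `127.0.0.1:6443`. The sketch below compares the two settings. Everything in it is an assumption for illustration: the helper names are hypothetical, the config file paths are passed in because the post does not show them, and only the `SystemdCgroup` key of the containerd CRI runc options and the `cgroupDriver` field of a KubeletConfiguration file are inspected.

```shell
# Hypothetical helpers: print the cgroup driver each side appears to use.

containerd_cgroup_driver() {
  # $1: containerd config file (assumed; /etc/containerd/config.toml is the default path).
  # runc uses the systemd driver only when SystemdCgroup = true is set.
  if grep -Eq '^[[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*true' "$1"; then
    echo systemd
  else
    echo cgroupfs
  fi
}

kubelet_cgroup_driver() {
  # $1: KubeletConfiguration YAML file (path is an assumption).
  # An unset field is reported as cgroupfs, the default of the v1.20 kubelet used here.
  local driver
  driver=$(sed -n 's/^[[:space:]]*cgroupDriver:[[:space:]]*"\{0,1\}\([a-z]*\)"\{0,1\}.*/\1/p' "$1" | head -n 1)
  echo "${driver:-cgroupfs}"
}

compare_cgroup_drivers() {
  # Prints both values and succeeds only when they agree.
  local c k
  c=$(containerd_cgroup_driver "$1")
  k=$(kubelet_cgroup_driver "$2")
  echo "containerd=$c kubelet=$k"
  [ "$c" = "$k" ]
}
```

A call such as `compare_cgroup_drivers /etc/containerd/config.toml <kubelet-config-file>` that prints two different values would be consistent with the error above. The two sides then need to be aligned, and kubelet and containerd restarted.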

Listening ports of each component:

[root@k8s-node3 kubernetes]# netstat -ntlp

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 192.168.122.1:53 0.0.0.0:* LISTEN 1560/dnsmasq

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 1104/sshd

tcp 0 0 127.0.0.1:631 0.0.0.0:* LISTEN 1105/cupsd

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 1662/master

tcp 0 0 127.0.0.1:10248 0.0.0.0:* LISTEN 386/kubelet

tcp 0 0 127.0.0.1:10249 0.0.0.0:* LISTEN 393/kube-proxy

tcp 0 0 192.168.56.103:10250 0.0.0.0:* LISTEN 386/kubelet

tcp 0 0 192.168.56.103:2379 0.0.0.0:* LISTEN 757/etcd

tcp 0 0 127.0.0.1:2379 0.0.0.0:* LISTEN 757/etcd

tcp 0 0 192.168.56.103:2380 0.0.0.0:* LISTEN 757/etcd

tcp 0 0 127.0.0.1:37550 0.0.0.0:* LISTEN 28238/containerd

tcp 0 0 0.0.0.0:111 0.0.0.0:* LISTEN 729/rpcbind

tcp6 0 0 :::22 :::* LISTEN 1104/sshd

tcp6 0 0 ::1:631 :::* LISTEN 1105/cupsd

tcp6 0 0 :::111 :::* LISTEN 729/rpcbind

tcp6 0 0 :::10256 :::* LISTEN 393/kube-proxy
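
The list above has no entry for port 6443, which matches the refused connections: the nginx proxy that should listen on `127.0.0.1:6443` is not running. Scanning the table by eye is error-prone, so a small filter can do the check. This is a sketch only; `has_listener` is a hypothetical helper that assumes the column layout of `netstat -ntlp` shown here, with the local address in the fourth column.

```shell
# Hypothetical helper: succeed if the netstat text on stdin shows a
# listening socket on the given port.
has_listener() {
  local port=$1
  awk -v port="$port" '
    /LISTEN/ {
      # Column 4 is the local address, e.g. 127.0.0.1:10248 or :::22.
      # The port is whatever follows the last colon.
      n = split($4, parts, ":")
      if (parts[n] == port) found = 1
    }
    END { exit(found ? 0 : 1) }'
}
```

Usage could look like `netstat -ntlp | has_listener 6443 || echo "nothing is listening on 6443"`.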

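I looked at the other two posts and found that both were resolved by adding the instructor on WeChat, so I can't tell whether they had the same problem. I would appreciate the instructor's help with this. Thank you!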