Question about the classic mode

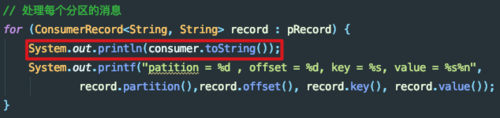

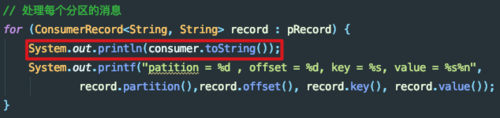

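Hello teacher. As I understand it, the classic mode creates multiple consumer objects to consume messages. I don't know much about multithreading, so I simply printed the consumer object. However, I found that every message was consumed by the same object; it doesn't look like multiple objects were created to do the consuming.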

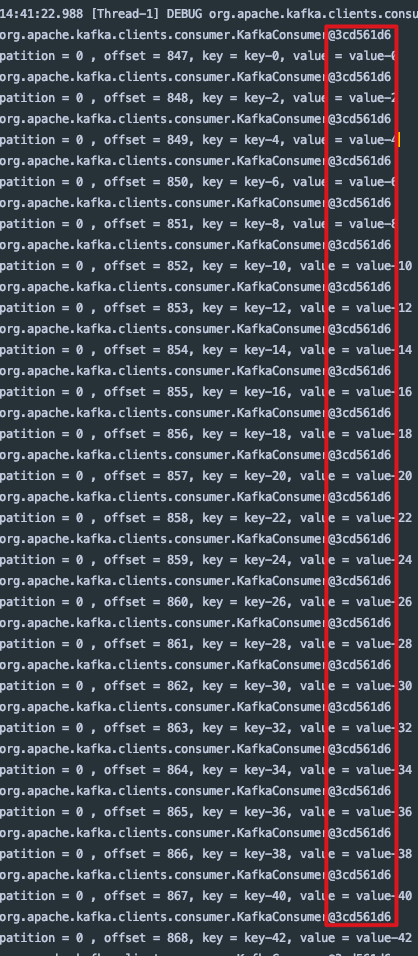

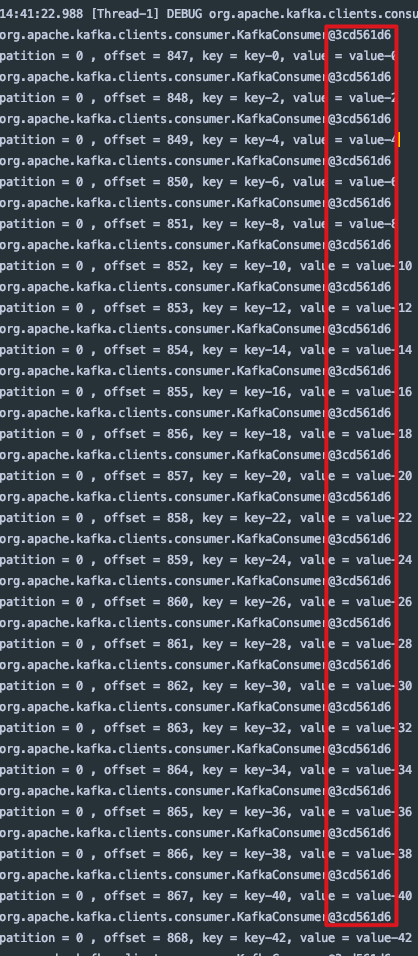

The screenshots show only part of the output; in fact, every line shows the same object.
