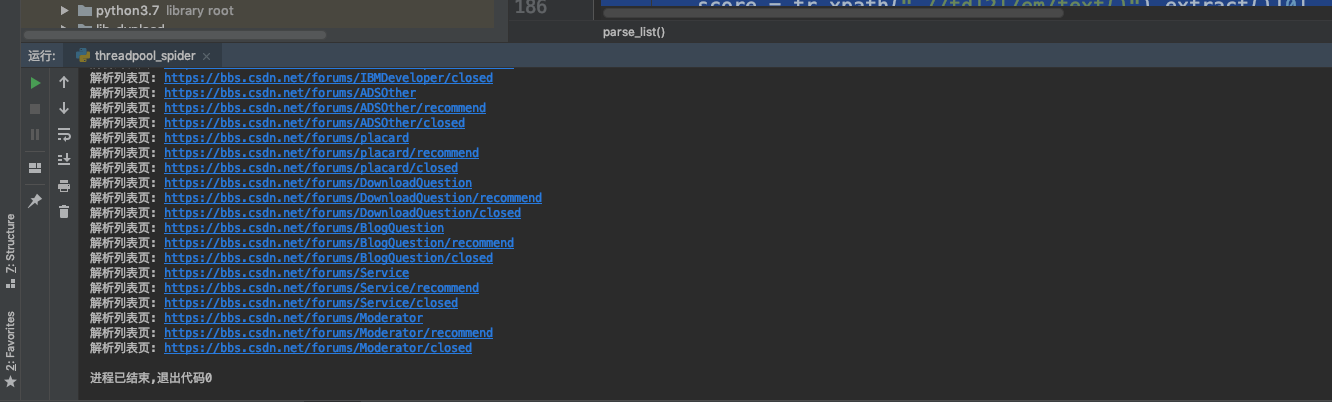

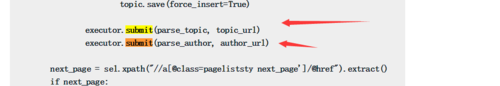

The thread pool exits as soon as parse_list has run; parse_topic and parse_author are never executed.

# -*- coding: utf-8 -*-
# @Time : 2019/6/8 17:37
# @Author : imooc
# @File : threadpool_spider.py
# @Software: PyCharm
import re
import ast
from urllib import parse
from datetime import datetime

import requests
from scrapy import Selector

from csdn_spider.models import *

domain = "https://bbs.csdn.net"
url_list = []

def get_nodes_json():
    # Fetch the forum node list
    left_menu_text = requests.get(domain + "/dynamic_js/left_menu.js?csdn").text
    nodes_str_match = re.search("forumNodes: (.*])", left_menu_text)
    if nodes_str_match:
        nodes_str = nodes_str_match.group(1).replace("null", "None")
        nodes_list = ast.literal_eval(nodes_str)
        return nodes_list
    return []

def process_nodes_list(nodes_list):
    # Collect every url into url_list, recursing into child nodes
    for item in nodes_list:
        if "url" in item and item["url"]:
            url_list.append(item["url"])
        if "children" in item:
            process_nodes_list(item["children"])

def get_level1_urls(nodes_list):
    # Collect the first-level urls
    level1_urls = []
    for item in nodes_list:
        if "url" in item and item["url"]:
            level1_urls.append(item["url"])
    return level1_urls

def get_last_urls():
    # Drop the first-level urls to get the final list of urls to crawl
    nodes_list = get_nodes_json()
    process_nodes_list(nodes_list)
    level1_urls = get_level1_urls(nodes_list)
    last_url = []
    for url in url_list:
        if url not in level1_urls:
            last_url.append(url)
    all_urls = []
    for url in last_url:
        all_urls.append(parse.urljoin(domain, url))
        all_urls.append(parse.urljoin(domain, url + "/recommend"))
        all_urls.append(parse.urljoin(domain, url + "/closed"))
    return all_urls

def parse_topic(topic_url):
    # Fetch the topic detail page and its replies
    print("Parsing topic detail page: {}".format(topic_url))
    topic_id = topic_url.split('/')[-1]
    res_text = requests.get(topic_url).text
    sel = Selector(text=res_text)
    all_divs = sel.xpath("//div[starts-with(@id,'post-')]")
    topic_item = all_divs[0]
    content = topic_item.xpath(".//div[@class='post_body post_body_min_h']").extract()[0]
    praised_nums = topic_item.xpath(".//label[@class='red_praise digg']//em/text()").extract()[0]
    jtl_str = topic_item.xpath(".//div[@class='close_topic']/text()").extract()[0]
    jtl = 0
    # Match a percentage; the backslashes were missing from the posted pattern
    jtl_match = re.search(r'(100|[1-9]?\d(\.\d\d?\d?)?)%$|0$', jtl_str, re.M)
    # group(1) is None when only the bare "0" alternative matched
    if jtl_match and jtl_match.group(1):
        jtl = float(jtl_match.group(1))
    existed_topics = Topic.select().where(Topic.id == topic_id)
    if existed_topics:
        topic = existed_topics[0]
        topic.content = content
        topic.praised_nums = int(praised_nums)
        topic.jtl = jtl
        topic.save()
    for answer_item in all_divs[1:]:
        author_info = answer_item.xpath(".//div[@class='nick_name']//a[1]/@href").extract()[0]
        author_id = author_info.split("/")[-1]
        create_time_str = answer_item.xpath(".//label[@class='date_time']/text()").extract()[0]
        create_time = datetime.strptime(create_time_str, "%Y-%m-%d %H:%M:%S")
        answer_content = answer_item.xpath(".//div[@class='post_body post_body_min_h']").extract()[0]
        answer_praised_nums = answer_item.xpath(".//label[@class='red_praise digg']//em/text()").extract()[0]
        answer_id = answer_item.xpath(".//@data-post-id").extract()[0]
        answer = Answer()
        answer.id = int(answer_id)
        answer.topic_id = topic_id
        answer.author = author_id
        answer.create_time = create_time
        answer.praised_nums = int(answer_praised_nums)
        answer.content = answer_content
        existed_answer = Answer.select().where(Answer.id == answer_id)
        if existed_answer:
            answer.save()
        else:
            answer.save(force_insert=True)
    next_page = sel.xpath("//a[@class='pageliststy next_page']/@href").extract()
    if next_page:
        next_url = parse.urljoin(domain, next_page[0])
        executor.submit(parse_topic, next_url)

def parse_author(url):
    author_id = url.split("/")[-1]
    # Fetch the author's profile details
    print("Parsing author detail page: {}".format(url))
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:65.0) Gecko/20100101 Firefox/65.0',
    }
    res_text = requests.get(url, headers=headers).text
    sel = Selector(text=res_text)
    author = Author()
    author.id = author_id
    all_li_strs = sel.xpath("//ul[@class='mod_my_t clearfix']/li/span/text()").extract()
    click_nums = all_li_strs[0]
    original_nums = all_li_strs[1]
    forward_nums = int(all_li_strs[2])
    rate = int(all_li_strs[3])
    answer_nums = int(all_li_strs[4])
    praised_nums = int(all_li_strs[5])
    author.click_nums = click_nums
    author.original_nums = original_nums
    author.forward_nums = forward_nums
    author.rate = rate
    author.answer_nums = answer_nums
    author.praised_nums = praised_nums
    desc = sel.xpath("//dd[@class='user_desc']/text()").extract()
    if desc:
        author.desc = desc[0].strip()
    person_b = sel.xpath("//dd[@class='person_b']/ul/li")
    for item in person_b:
        item_text = "".join(item.extract())
        if "csdnc-m-add" in item_text:
            location = item.xpath(".//span/text()").extract()[0].strip()
            author.location = location
        else:
            industry = item.xpath(".//span/text()").extract()[0].strip()
            author.industry = industry
    name = sel.xpath("//h4[@class='username']/text()").extract()[0]
    author.name = name.strip()
    existed_author = Author.select().where(Author.id == author_id)
    if existed_author:
        author.save()
    else:
        author.save(force_insert=True)

def parse_list(url):
    # Extract the rows of a list page
    print("Parsing list page: {}".format(url))
    res_text = requests.get(url).text
    sel = Selector(text=res_text)
    all_trs = sel.xpath("//table[@class='forums_tab_table']//tbody//tr")
    for tr in all_trs:
        status = tr.xpath(".//td[1]/span/text()").extract()[0]
        score = tr.xpath(".//td[2]/em/text()").extract()[0]
        topic_url = parse.urljoin(domain, tr.xpath(".//td[3]/a[contains(@class,'forums_title')]/@href").extract()[0])
        topic_id = int(topic_url.split("/")[-1])
        topic_title = tr.xpath(".//td[3]/a[contains(@class,'forums_title')]/text()").extract()[0]
        author_url = parse.urljoin(domain, tr.xpath(".//td[4]/a/@href").extract()[0])
        author_id = author_url.split("/")[-1]
        create_time_str = tr.xpath(".//td[4]/em/text()").extract()[0]
        create_time = datetime.strptime(create_time_str, "%Y-%m-%d %H:%M")
        answer_info = tr.xpath(".//td[5]/span/text()").extract()[0]
        answer_nums = int(answer_info.split("/")[0])
        click_nums = int(answer_info.split("/")[1])
        last_time_str = tr.xpath(".//td[6]/em/text()").extract()[0]
        last_time = datetime.strptime(last_time_str, "%Y-%m-%d %H:%M")
        topic = Topic()
        topic.id = topic_id
        topic.title = topic_title
        topic.author = author_id
        topic.click_nums = click_nums
        topic.answer_nums = answer_nums
        topic.create_time = create_time
        topic.last_answer_time = last_time
        topic.score = score
        topic.status = status
        existed_topic = Topic.select().where(Topic.id == topic.id)
        if existed_topic:
            topic.save()
        else:
            topic.save(force_insert=True)
        executor.submit(parse_topic, topic_url)
        executor.submit(parse_author, author_url)
    # The posted XPath was missing the opening quote before pageliststy
    next_page = sel.xpath("//a[@class='pageliststy next_page']/@href").extract()
    if next_page:
        next_url = parse.urljoin(domain, next_page[0])
        executor.submit(parse_list, next_url)

if __name__ == "__main__":
    from concurrent.futures import ThreadPoolExecutor
    executor = ThreadPoolExecutor(max_workers=10)
    last_urls = get_last_urls()
    for url in last_urls:
        executor.submit(parse_list, url)
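One possible explanation, not confirmed in this thread: the main block above returns right after submitting the initial parse_list tasks, and depending on the Python version, `executor.submit` calls made from worker threads after the interpreter has begun shutting down may be rejected with a `RuntimeError` that is stored silently in the future. The sketch below shows one way to keep the main thread waiting until every task, including tasks submitted by other tasks, has finished. `TrackedPool`, `fake_parse_list`, and `fake_parse_topic` are hypothetical names used only for illustration; they are not part of the original script.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


class TrackedPool:
    """Hypothetical helper: counts outstanding tasks so the caller can wait for all of them."""

    def __init__(self, max_workers=10):
        self._executor = ThreadPoolExecutor(max_workers=max_workers)
        self._pending = 0
        self._cond = threading.Condition()
        self.errors = []  # exceptions raised inside tasks

    def submit(self, fn, *args):
        with self._cond:
            self._pending += 1
        try:
            future = self._executor.submit(fn, *args)
        except Exception:
            # Undo the count if the executor refused the task
            with self._cond:
                self._pending -= 1
                self._cond.notify_all()
            raise
        future.add_done_callback(self._on_done)
        return future

    def _on_done(self, future):
        exc = future.exception()
        with self._cond:
            if exc is not None:
                self.errors.append(exc)
            self._pending -= 1
            if self._pending == 0:
                self._cond.notify_all()

    def wait(self):
        # Block until no task is running or queued, then shut the pool down
        with self._cond:
            while self._pending:
                self._cond.wait()
        self._executor.shutdown(wait=True)


pool = TrackedPool(max_workers=4)
visited = []


def fake_parse_topic(n):
    visited.append(("topic", n))


def fake_parse_list(n):
    visited.append(("list", n))
    pool.submit(fake_parse_topic, n)         # child task, like parse_topic
    if n < 3:
        pool.submit(fake_parse_list, n + 1)  # "next page", like parse_list


pool.submit(fake_parse_list, 1)
pool.wait()
print(sorted(visited))
print("errors:", pool.errors)
```

A task always registers its children before its own completion callback runs, so the pending counter cannot reach zero while work is still being scheduled. In the original script the same idea would mean replacing the bare `executor.submit(...)` calls with calls on such a wrapper and calling `wait()` at the end of the main block.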

The Topic table ended up with only 178 rows in total.


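Have you tried setting a breakpoint here to see whether execution reaches this line? If it does, set breakpoints inside functions such as parse_detail as well and check whether they are ever entered.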
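Alongside breakpoints, it may help to know that an exception raised inside a function submitted to a `ThreadPoolExecutor` is stored on the returned `Future` and is not printed unless something asks for it. The following is a minimal sketch of surfacing such errors with a done-callback; `log_failure` and `broken_task` are hypothetical names, and `broken_task` merely stands in for a parser that fails.

```python
import traceback
from concurrent.futures import ThreadPoolExecutor


def log_failure(future):
    # Print the traceback of any exception the task raised
    exc = future.exception()
    if exc is not None:
        traceback.print_exception(type(exc), exc, exc.__traceback__)


def broken_task():
    # Stand-in for a parse function that hits a bad selector or regex
    raise ValueError("simulated parsing error")


# The with-block waits for submitted tasks before leaving
with ThreadPoolExecutor(max_workers=2) as executor:
    future = executor.submit(broken_task)
    future.add_done_callback(log_failure)

captured = future.exception()
print("captured:", repr(captured))
```

Attaching the same callback to each `executor.submit(...)` call in the spider would show whether parse_topic and parse_author are failing, or whether the submit calls themselves are being rejected.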
