Pod-to-pod communication problems after deploying services in k8s

My YAML file is written as shown below. The Docker version is 19.03.10, the k8s version is 1.19.3, and Calico provides the network.

apiVersion: apps/v1
kind: Deployment
metadata:
  name: amf
spec:
  selector:
    matchLabels:
      app: amf
  replicas: 1
  template:
    metadata:
      labels:
        app: amf
      annotations:
        cni.projectcalico.org/ipAddrs: "[\"10.100.200.132\"]"
    spec:
      nodeSelector:
        type: k8smec
      containers:
      - image: amf-k8s:v3
        imagePullPolicy: Never
        name: amf
        securityContext:
          privileged: true
        command: ["/bin/bash"]
        args: ["-c", "/openair-amf/bin/oai_amf -c /openair-amf/etc/amf.conf -o"]

I wrote a YAML file like this for every microservice. After apply, some of the microservices did not run properly, and the pod logs showed:

what(): Cannot resolve a DNS name resolve: Host not found (authoritative)

At that point, /etc/resolv.conf inside the pod had its default content:

nameserver 10.96.0.10
search default.svc.cluster.local svc.cluster.local cluster.local
options ndots:5
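One way to see what the default resolv.conf above does to a lookup is to list the names a stub resolver would try. The sketch below models the usual glibc rule for `search` and `ndots`; it sends no DNS queries, and the host name `oai-nrf` is a hypothetical placeholder, not a name taken from the configuration in this post.

```python
def candidate_names(name, search, ndots):
    """Return the query names a glibc-style stub resolver would try, in order."""
    if name.endswith("."):
        # A trailing dot marks the name as fully qualified: no search list.
        return [name]
    expanded = [f"{name}.{domain}." for domain in search]
    absolute = f"{name}."
    if name.count(".") >= ndots:
        # Enough dots: try the name as-is first, then the search list.
        return [absolute] + expanded
    # Too few dots: walk the search list first, try the bare name last.
    return expanded + [absolute]


# Search list and ndots copied from the pod's default /etc/resolv.conf.
search = ["default.svc.cluster.local", "svc.cluster.local", "cluster.local"]
for query in candidate_names("oai-nrf", search, ndots=5):  # hypothetical name
    print(query)
```

Every candidate is sent to the nameserver listed in the file, so with the default content all of them go to 10.96.0.10.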

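However, after I added a section to the YAML file that overrides /etc/resolv.conf, the microservices ran normally. I do not quite understand why this is necessary. 192.168.10.x is the subnet of my host machine.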

        volumeMounts:
        - mountPath: /etc/resolv.conf
          name: amf-volume
      volumes:
      - name: amf-volume
        hostPath:
          path: /home/k8s-mec/resolv.conf

The content of that file is:

nameserver 192.168.10.1
search default.svc.cluster.local svc.cluster.local cluster.local
options ndots:5

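After fixing the problem above, all pods reach Running, and I am sure my images are correct. But once the microservices run in k8s, the pods cannot communicate properly: one microservice cannot register with another and gets no response. I do not know which part of the configuration went wrong when migrating the microservices into k8s.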

[2022-05-09T10:35:25.789748] [spgwu] [spgwu_app] [info ] Send NF Instance Registration to NRF

[2022-05-09T10:35:25.890115] [spgwu] [spgwu_app] [warn ] Could not get response from NRF
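A log line like "Could not get response from NRF" does not say whether the NRF address was unreachable or the NRF simply did not answer. A minimal sketch for telling the two apart, assuming Python 3 is available inside the pod (an assumption, the images in this post may not ship it): it only tries to open a TCP connection. The host and port come from the command line, and the script name in the usage note is hypothetical.

```python
import socket
import sys


def can_connect(host, port, timeout=3.0):
    """Return True if a TCP connection to (host, port) can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers DNS failures (socket.gaierror), refusals, and timeouts.
        return False


if __name__ == "__main__" and len(sys.argv) == 3:
    # Arguments: the NRF host (IP or name) and port taken from the
    # configuration of the service that fails to register.
    print(can_connect(sys.argv[1], int(sys.argv[2])))
```

It could be run from inside the spgwu pod as `python3 check_nrf.py <nrf-host> <nrf-port>`. A result of False points at addressing, DNS, or the network path; True suggests the problem sits above TCP.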

Update with some of my tests:

These all look normal:

sudo kubectl get pods -n kube-system

NAME                                       READY   STATUS    RESTARTS   AGE
calico-kube-controllers-659bd7879c-46n8c   1/1     Running   0          9d
calico-node-44vcq                          1/1     Running   0          9d
calico-node-sz6vf                          1/1     Running   0          9d
calico-node-vq8mh                          1/1     Running   0          9d
coredns-f9fd979d6-6kcxk                    1/1     Running   0          96m
coredns-f9fd979d6-9rzbl                    1/1     Running   0          96m
etcd-k8smec                                1/1     Running   1          9d
kube-apiserver-k8smec                      1/1     Running   0          8d
kube-controller-manager-k8smec             1/1     Running   6          9d
kube-proxy-bwl58                           1/1     Running   1          9d
kube-proxy-fwpck                           1/1     Running   1          9d
kube-proxy-n7s5v                           1/1     Running   1          9d
kube-scheduler-k8smec                      1/1     Running   6          9d
metrics-server-v0.5.1-544f94d7bf-4m6w4     2/2     Running   3          8d

Some of the k8s configuration files:

sudo cat /var/lib/kubelet/config.yaml

apiVersion: kubelet.config.k8s.io/v1beta1
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 0s
    enabled: true
  x509:
    clientCAFile: /etc/kubernetes/pki/ca.crt
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 0s
    cacheUnauthorizedTTL: 0s
cgroupDriver: systemd
clusterDNS:
- 10.96.0.10
clusterDomain: cluster.local
cpuManagerReconcilePeriod: 0s
evictionPressureTransitionPeriod: 0s
fileCheckFrequency: 0s
healthzBindAddress: 127.0.0.1
healthzPort: 10248
httpCheckFrequency: 0s
imageMinimumGCAge: 0s
kind: KubeletConfiguration
logging: {}
nodeStatusReportFrequency: 0s
nodeStatusUpdateFrequency: 0s
resolvConf: /run/systemd/resolve/resolv.conf
rotateCertificates: true
runtimeRequestTimeout: 0s
staticPodPath: /etc/kubernetes/manifests
streamingConnectionIdleTimeout: 0s
syncFrequency: 0s
volumeStatsAggPeriod: 0s

sudo cat /run/systemd/resolve/resolv.conf

# This file is managed by systemd-resolved(8). Do not edit.

#

# Third party programs must not access this file directly, but

# only through the symlink at /etc/resolv.conf. To manage

# resolv.conf(5) in a different way, replace the symlink by a

# static file or a different symlink.

nameserver 8.8.8.8

nameserver 114.114.114.114

sudo cat /etc/resolv.conf

# Dynamic resolv.conf(5) file for glibc resolver(3) generated by resolvconf(8)

# DO NOT EDIT THIS FILE BY HAND -- YOUR CHANGES WILL BE OVERWRITTEN

nameserver 8.8.8.8

nameserver 114.114.114.114

My cluster:

sudo kubectl get nodes -o wide

NAME     STATUS   ROLES    AGE   VERSION   INTERNAL-IP      EXTERNAL-IP   OS-IMAGE             KERNEL-VERSION       CONTAINER-RUNTIME
node2    Ready    <none>   9d    v1.19.3   192.168.10.11    <none>        Ubuntu 16.04.7 LTS   4.15.0-142-generic   docker://19.3.10
master   Ready    master   9d    v1.19.3   192.168.10.139   <none>        Ubuntu 16.04.7 LTS   4.15.0-142-generic   docker://19.3.10
node1    Ready    <none>   9d    v1.19.3   192.168.10.201   <none>        Ubuntu 16.04.6 LTS   4.15.0-142-generic   docker://19.3.10

The result of running this on the master node:

sudo calicoctl node status

Calico process is running.

IPv4 BGP status
+----------------+-------------------+-------+------------+-------------+
|  PEER ADDRESS  |     PEER TYPE     | STATE |   SINCE    |    INFO     |
+----------------+-------------------+-------+------------+-------------+
| 192.168.10.201 | node-to-node mesh | up    | 2022-05-09 | Established |
| 192.168.10.11  | node-to-node mesh | up    | 2022-05-12 | Established |
+----------------+-------------------+-------+------------+-------------+

IPv6 BGP status
No IPv6 peers found.

The result of running it on a worker node:

sudo calicoctl node status

Calico process is running.

IPv4 BGP status
+----------------+-------------------+-------+------------+-------------+
|  PEER ADDRESS  |     PEER TYPE     | STATE |   SINCE    |    INFO     |
+----------------+-------------------+-------+------------+-------------+
| 192.168.10.139 | node-to-node mesh | up    | 2022-05-12 | Established |
| 192.168.10.201 | node-to-node mesh | up    | 2022-05-12 | Established |
+----------------+-------------------+-------+------------+-------------+

IPv6 BGP status
No IPv6 peers found.

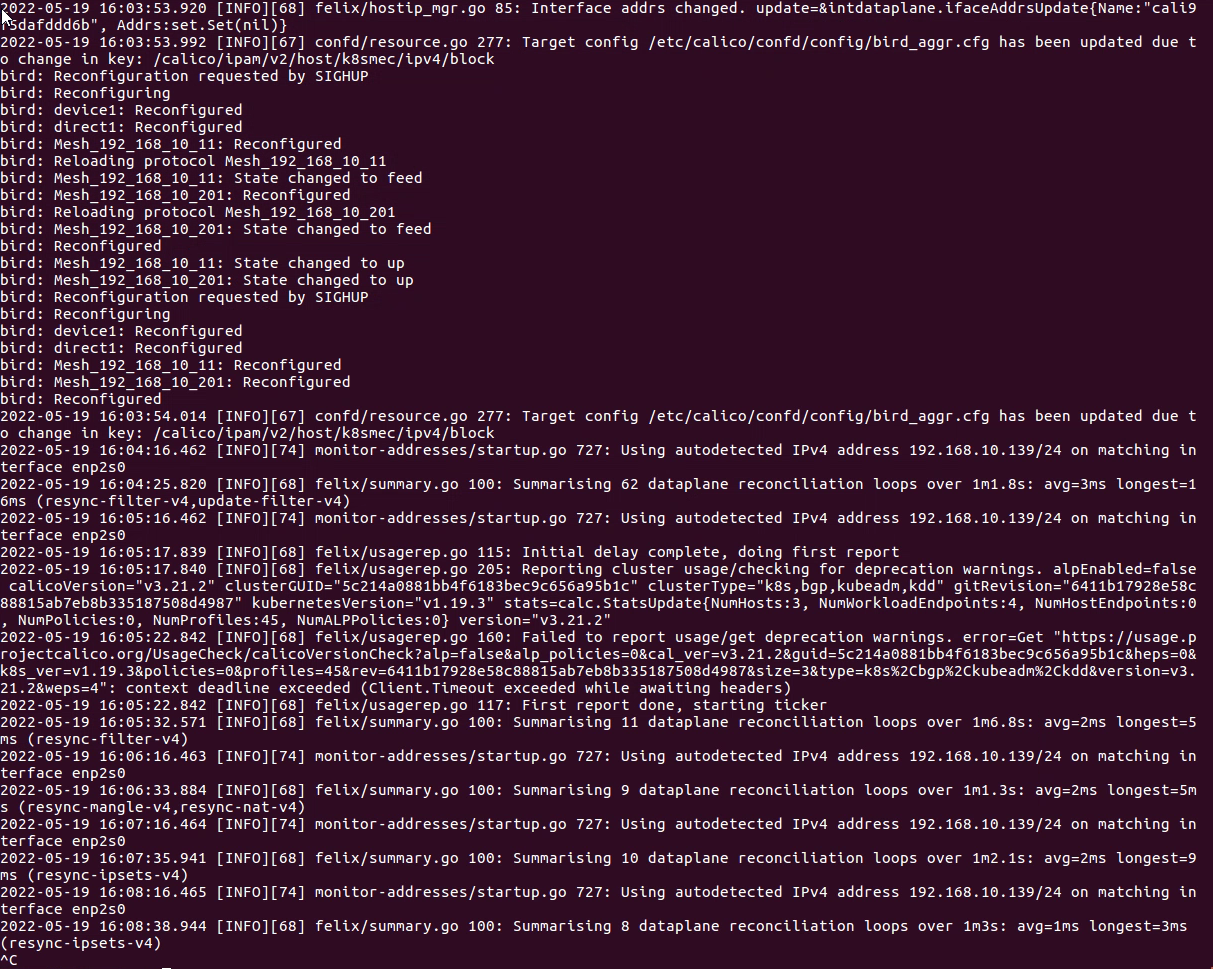

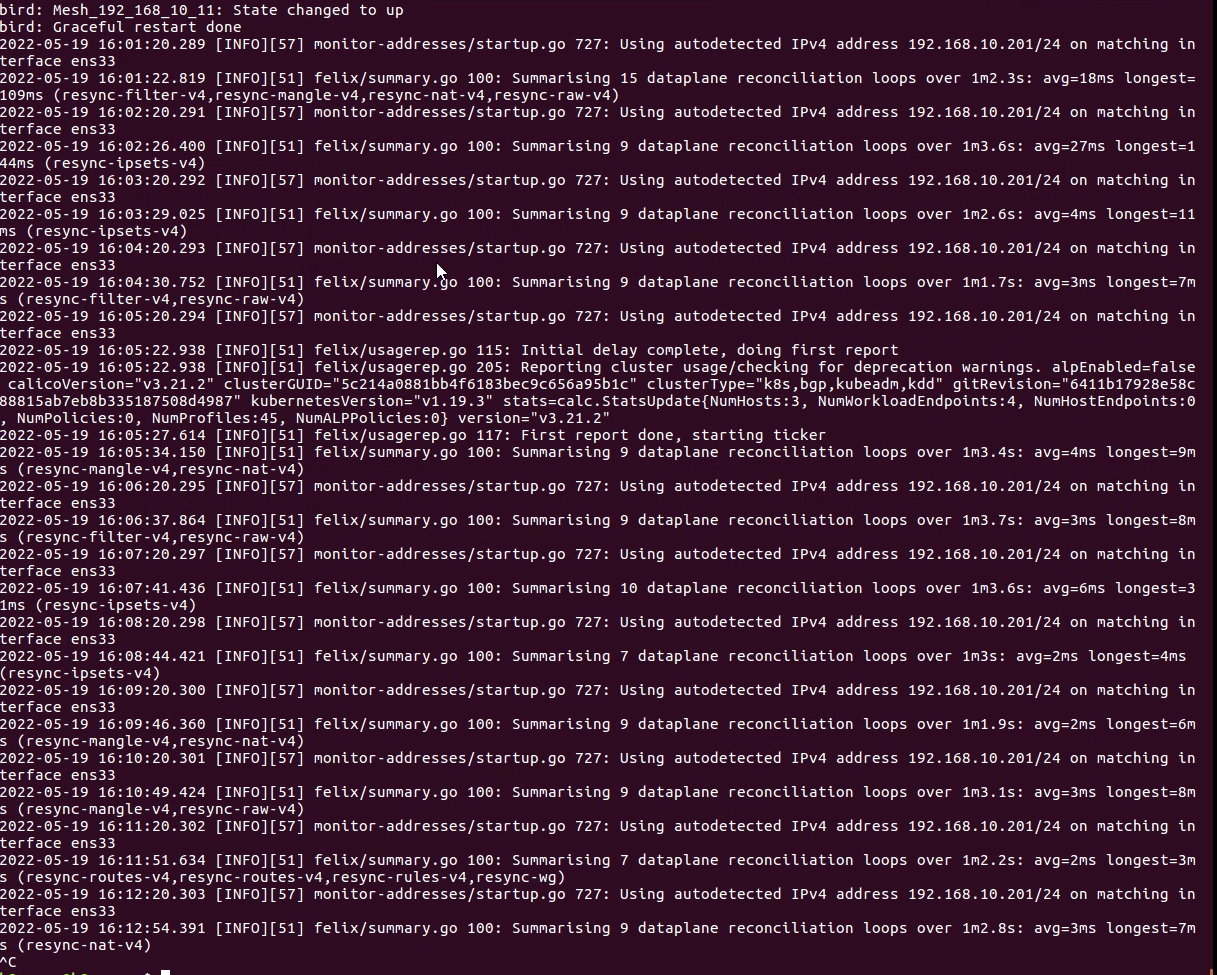

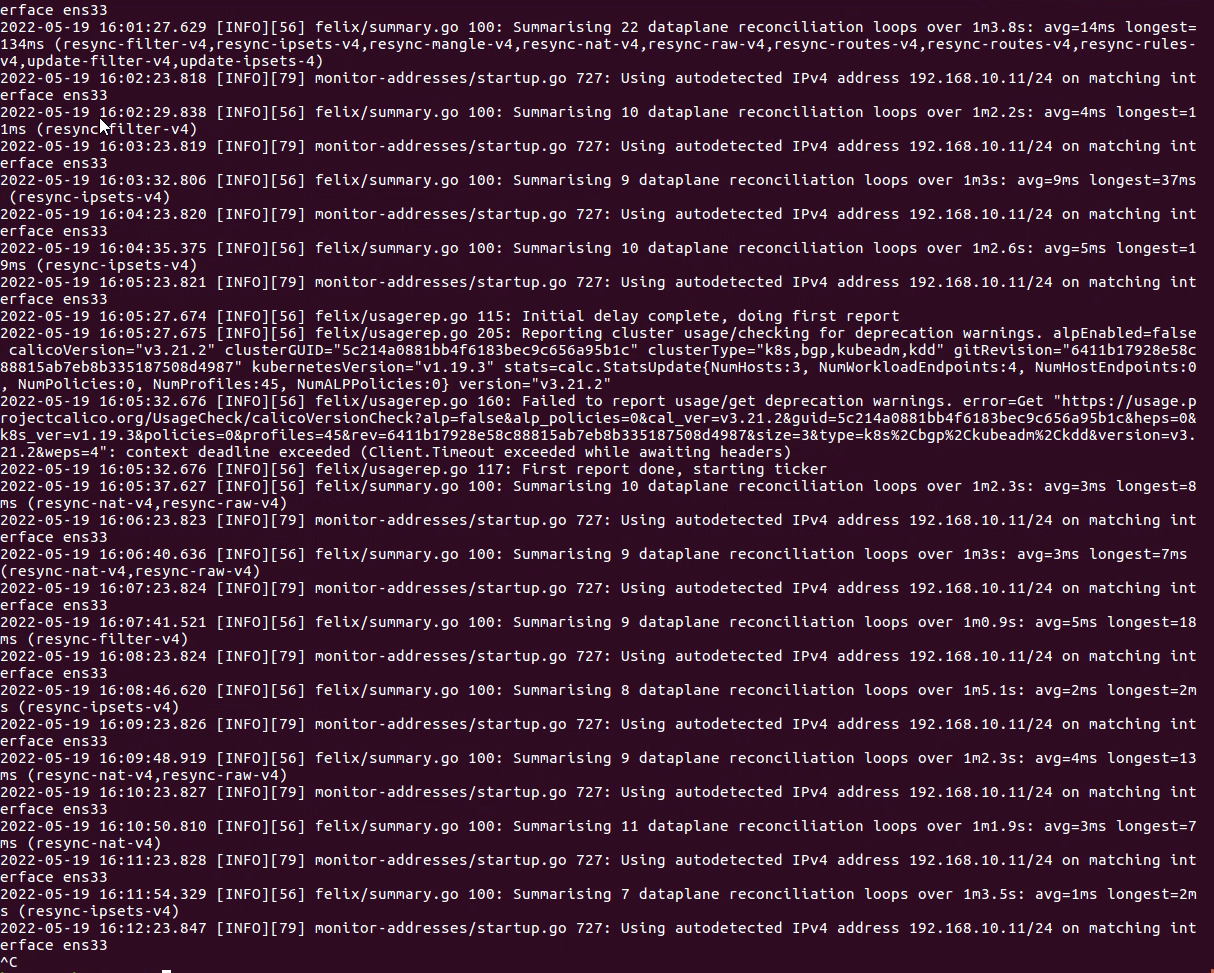

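The screenshots above are the Calico logs from master, node1, and node2 respectively. I could not see any problem in them.

I checked the kube-proxy logs and saw a line marked W. Could this be the cause? I have not yet found a fix for this message. Could you take a look for me?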

k8s-mec@k8smec:~$ sudo kubectl logs -f kube-proxy-fwpck -n kube-system

I0509 07:15:49.186836 1 node.go:136] Successfully retrieved node IP: 192.168.10.139

I0509 07:15:49.187344 1 server_others.go:111] kube-proxy node IP is an IPv4 address (192.168.10.139), assume IPv4 operation

W0509 07:15:52.858142 1 server_others.go:579] Unknown proxy mode "", assuming iptables proxy

I0509 07:15:52.858279 1 server_others.go:186] Using iptables Proxier.

I0509 07:15:52.859119 1 server.go:650] Version: v1.19.3

I0509 07:15:52.859767 1 conntrack.go:100] Set sysctl 'net/netfilter/nf_conntrack_max' to 131072

I0509 07:15:52.859820 1 conntrack.go:52] Setting nf_conntrack_max to 131072

I0509 07:15:52.859927 1 conntrack.go:100] Set sysctl 'net/netfilter/nf_conntrack_tcp_timeout_established' to 86400

I0509 07:15:52.860019 1 conntrack.go:100] Set sysctl 'net/netfilter/nf_conntrack_tcp_timeout_close_wait' to 3600

I0509 07:15:52.861412 1 config.go:315] Starting service config controller

I0509 07:15:52.861442 1 shared_informer.go:240] Waiting for caches to sync for service config

I0509 07:15:52.861446 1 config.go:224] Starting endpoint slice config controller

I0509 07:15:52.861468 1 shared_informer.go:240] Waiting for caches to sync for endpoint slice config

I0509 07:15:52.961679 1 shared_informer.go:247] Caches are synced for service config

I0509 07:15:52.961706 1 shared_informer.go:247] Caches are synced for endpoint slice config

I0518 08:56:57.879820 1 trace.go:205] Trace[1789821911]: "iptables Monitor CANARY check" (18-May-2022 08:56:52.868) (total time: 5009ms):

Trace[1789821911]: [5.009166574s] [5.009166574s] END

W0518 08:56:57.879839 1 iptables.go:568] Could not check for iptables canary mangle/KUBE-PROXY-CANARY: exit status 4
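For reading the kube-proxy output above: each line starts with a klog header, whose first letter is the severity (I, W, E, or F), so a W line is a warning rather than an error. The first W line only reports that no proxy mode was configured and that the iptables proxier was assumed. A minimal sketch that splits such a line into fields, using a regular expression written for the lines in this post (the function name is made up for illustration):

```python
import re

# klog header: severity letter, mmdd, time, thread id, file:line, then message.
KLOG_LINE = re.compile(
    r"^(?P<severity>[IWEF])(?P<month>\d{2})(?P<day>\d{2}) "
    r"(?P<time>\d{2}:\d{2}:\d{2}\.\d{6})\s+(?P<thread>\d+) "
    r"(?P<file>[^:\s]+):(?P<line>\d+)\] (?P<message>.*)$"
)
SEVERITY = {"I": "info", "W": "warning", "E": "error", "F": "fatal"}


def parse_klog(line):
    """Split one klog line into its fields, or return None if it does not match."""
    match = KLOG_LINE.match(line)
    if match is None:
        return None
    fields = match.groupdict()
    fields["severity"] = SEVERITY[fields["severity"]]
    return fields


# Sample copied from the kube-proxy log in this post.
sample = 'W0509 07:15:52.858142 1 server_others.go:579] Unknown proxy mode "", assuming iptables proxy'
entry = parse_klog(sample)
print(entry["severity"], entry["file"], entry["message"])
```

Continuation lines such as the `Trace[...]` line do not carry a header, so `parse_klog` returns None for them.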

#########

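My Calico logs are too long to paste here, so I put them on my blog; the hyperlink is below. Could you take a look? I could not spot the problem myself.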
