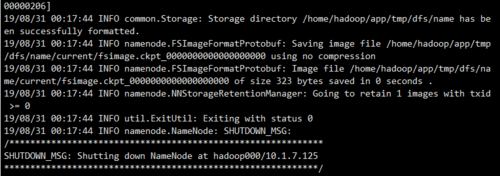

DataNode fails to start

2018-11-25 00:31:24,717 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: registered UNIX signal handlers for [TERM, HUP, INT]

2018-11-25 00:31:25,369 INFO org.apache.hadoop.metrics2.impl.MetricsConfig: loaded properties from hadoop-metrics2.properties

2018-11-25 00:31:25,439 INFO org.apache.hadoop.metrics2.impl.MetricsSystemImpl: Scheduled snapshot period at 10 second(s).

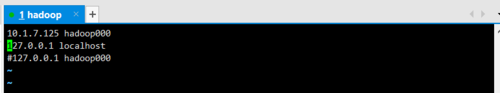

2018-11-25 00:31:25,448 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: Configured hostname is localhost

2018-11-25 00:31:25,452 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: Starting DataNode with maxLockedMemory = 0

2018-11-25 00:31:25,480 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: Opened streaming server at /0.0.0.0:50010

2018-11-25 00:31:25,482 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: Balancing bandwith is 10485760 bytes/s

2018-11-25 00:31:26,021 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: supergroup = supergroup

2018-11-25 00:31:26,159 INFO org.apache.hadoop.ipc.Server: Starting Socket Reader #1 for port 50020

2018-11-25 00:31:26,365 INFO org.apache.hadoop.ipc.Server: IPC Server Responder: starting

2018-11-25 00:46:09,109 WARN org.apache.hadoop.hdfs.server.datanode.DataNode: IOException in offerService

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.net.NetUtils.wrapWithMessage(NetUtils.java:791)

at org.apache.hadoop.net.NetUtils.wrapException(NetUtils.java:764)

at org.apache.hadoop.ipc.Client.call(Client.java:1508)

at org.apache.hadoop.ipc.Client.call(Client.java:1441)

STARTUP_MSG: java = 1.8.0_91

************************************************************/

2018-11-25 00:46:50,590 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: registered UNIX signal handlers for [TERM, HUP, INT]

2018-11-25 00:46:51,234 INFO org.apache.hadoop.metrics2.impl.MetricsConfig: loaded properties from hadoop-metrics2.properties

2018-11-25 00:46:51,302 INFO org.apache.hadoop.metrics2.impl.MetricsSystemImpl: Scheduled snapshot period at 10 second(s).

2018-11-25 00:46:51,311 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: Configured hostname is hadoop000

2018-11-25 00:46:51,315 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: Starting DataNode with maxLockedMemory = 0

2018-11-25 00:46:51,335 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: Opened streaming server at /0.0.0.0:50010

2018-11-25 00:46:51,337 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: Balancing bandwith is 10485760 bytes/s

2018-11-25 00:46:51,938 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: supergroup = supergroup

2018-11-25 00:46:52,096 INFO org.apache.hadoop.ipc.Server: Starting Socket Reader #1 for port 50020

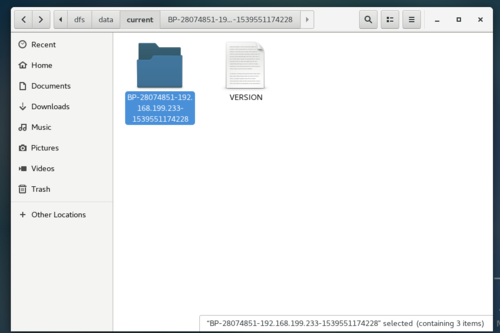

2018-11-25 01:00:24,965 INFO org.apache.hadoop.hdfs.server.datanode.fsdataset.impl.FsDatasetAsyncDiskService: Deleted BP-28074851-192.168.199.233-1539551174228 blk_1073742285_1461 file /home/hadoop/app/tmp/dfs/data/current/BP-28074851-192.168.199.233-1539551174228/current/finalized/subdir0/subdir1/blk_1073742285

2018-11-25 01:00:24,966 INFO org.apache.hadoop.hdfs.server.datanode.fsdataset.impl.FsDatasetAsyncDiskService: Deleted BP-28074851-192.168.199.233-1539551174228 blk_1073742286_1462 file /home/hadoop/app/tmp/dfs/data/current/BP-28074851-192.168.199.233-1539551174228/current/finalized/subdir0/subdir1/blk_1073742286

2018-11-25 01:00:24,966 INFO org.apache.hadoop.hdfs.server.datanode.fsdataset.impl.FsDatasetAsyncDiskService: Deleted BP-28074851-192.168.199.233-1539551174228 blk_1073742287_1463 file /home/hadoop/app/tmp/dfs/data/current/BP-28074851-192.168.199.233-1539551174228/current/finalized/subdir0/subdir1/blk_1073742287

2018-11-25 01:13:25,281 WARN org.apache.hadoop.hdfs.server.datanode.DataNode: IOException in offerService


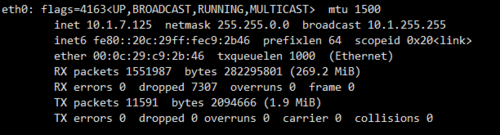

java.io.EOFException: End of File Exception between local host is: "hadoop000/192.168.199.233"; destination host is: "hadoop000":8020; : java.io.EOFException; For more details see: http://wiki.apache.org/hadoop/EOFException

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.net.NetUtils.wrapWithMessage(NetUtils.java:791)

at org.apache.hadoop.net.NetUtils.wrapException(NetUtils.java:764)

at org.apache.hadoop.ipc.Client.call(Client.java:1508)

at org.apache.hadoop.ipc.Client.call(Client.java:1441)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:230)

at com.sun.proxy.$Proxy16.sendHeartbeat(Unknown Source)

at org.apache.hadoop.hdfs.protocolPB.DatanodeProtocolClientSideTranslatorPB.sendHeartbeat(DatanodeProtocolClientSideTranslatorPB.java:154)

at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.sendHeartBeat(BPServiceActor.java:406)

at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.offerService(BPServiceActor.java:509)

Instructor, how do I fix this?
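The trace shows the failure inside `BPServiceActor.sendHeartBeat`, so the DataNode's heartbeat RPC to the NameNode at `hadoop000:8020` is being cut off with an `EOFException`. One quick check is whether anything on the DataNode host can open a TCP connection to that address at all. Below is a minimal sketch, assuming Python 3 is installed on that host; `namenode_reachable` is a hypothetical helper name, and the host/port should be whatever your `fs.defaultFS` setting in `core-site.xml` points to.

```python
import socket


def namenode_reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port opens within `timeout` seconds.

    Hypothetical helper: a successful connect only shows that *something*
    is listening on that address, not that it is a healthy NameNode.
    """
    try:
        # create_connection resolves the hostname (e.g. via /etc/hosts)
        # and then attempts the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused connections, timeouts (socket.timeout) and name-resolution
        # failures (socket.gaierror) are all OSError subclasses in Python 3.
        return False
```

For example, `namenode_reachable("hadoop000", 8020)` returning `False` would suggest the NameNode process is down or not listening on that address, in which case the NameNode's own log is the next place to look.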
