Classifier fails at runtime (now resolved; the fix is below the question)

When I run the random forest classifier, I get the error below.

// Random forest classifier
import org.apache.spark.ml.classification.RandomForestClassifier

val rf = new RandomForestClassifier()

rf.setFeaturesCol("features").setLabelCol("Survived")

val model = rf.fit(tmp4) // this step throws the error below

Exception in thread "Executor task launch worker-0" java.lang.OutOfMemoryError: PermGen space
    at java.lang.ClassLoader.defineClass1(Native Method)
    at java.lang.ClassLoader.defineClass(ClassLoader.java:800)
    at java.security.SecureClassLoader.defineClass(SecureClassLoader.java:142)
    at java.net.URLClassLoader.defineClass(URLClassLoader.java:449)
    at java.net.URLClassLoader.access$100(URLClassLoader.java:71)
    at java.net.URLClassLoader$1.run(URLClassLoader.java:361)
    at java.net.URLClassLoader$1.run(URLClassLoader.java:355)
    at java.security.AccessController.doPrivileged(Native Method)
    at java.net.URLClassLoader.findClass(URLClassLoader.java:354)
    at java.lang.ClassLoader.loadClass(ClassLoader.java:425)
    at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:308)
    at java.lang.ClassLoader.loadClass(ClassLoader.java:358)
    at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:387)
    at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1145)
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:615)
    at java.lang.Thread.run(Thread.java:745)

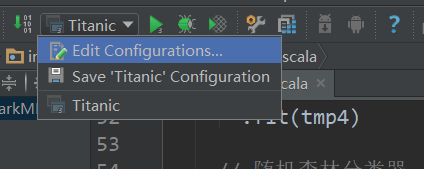

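After Googling, I found that the exception is caused by insufficient memory: the JVM's permanent generation (PermGen), which holds class metadata, ran out of space. The fix is to add VM options in the run configuration.

Add the following to the VM options: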

-server -Xms1024m -Xmx2048m -XX:MaxPermSize=512m -XX:ReservedCodeCacheSize=512m

However, I later found that keeping only -XX:MaxPermSize=512m is enough.
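
To confirm the VM option actually reached the JVM that runs the job, it may help to print the startup flags and the limit of the class-metadata pool. The following is a minimal sketch, not part of the original fix: the object name `JvmFlagCheck` and the helper `formatMax` are made up for illustration, and the pool-name filter assumes a HotSpot JVM, where the pool is typically named something containing "Perm" (Java 7 and earlier) or "Metaspace" (Java 8 and later, where PermGen no longer exists and `-XX:MaxPermSize` is ignored).

```scala
import java.lang.management.ManagementFactory
import scala.collection.JavaConverters._

// Hypothetical helper object, for illustration only.
object JvmFlagCheck {
  // Render a pool limit in MB; the JVM reports -1 when no maximum is defined.
  def formatMax(bytes: Long): String =
    if (bytes < 0) "undefined" else s"${bytes / (1024L * 1024L)} MB"

  def main(args: Array[String]): Unit = {
    // Flags the running JVM was actually started with.
    val jvmArgs = ManagementFactory.getRuntimeMXBean.getInputArguments.asScala
    println("JVM arguments: " + jvmArgs.mkString(" "))

    // Pool names are JVM-specific; matching on "Perm" / "Metaspace" is an assumption.
    ManagementFactory.getMemoryPoolMXBeans.asScala
      .filter(p => p.getName.contains("Perm") || p.getName.contains("Metaspace"))
      .foreach { p =>
        // getUsage may return null for an invalid pool, so wrap it in Option.
        val max = Option(p.getUsage).map(_.getMax).getOrElse(-1L)
        println(s"${p.getName}: max = ${formatMax(max)}")
      }
  }
}
```

Run it with the same run configuration as the Spark job. If `-XX:MaxPermSize=512m` does not show up in the printed arguments, the option was probably added to a different configuration than the one being launched.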
